Play music with a speaker

Welcome to this tutorial about speakers! If you've ever listened to music, watched a movie, or heard your own voice amplified through a microphone, you've encountered a speaker. In this tutorial, you'll explore the components of a speaker, how it works, and how to use one in your own projects. Let's get started!

Learning goals

- Learn how a speaker produces different sounds.

- Understand the difference between a buzzer and a speaker.

- Get a general idea of the I2S protocol.

- Know about different sound waveforms.

- Learn about audio sampling.

- Understand the difference between WAVE and MP3 files.

🔸Circuit - Speaker

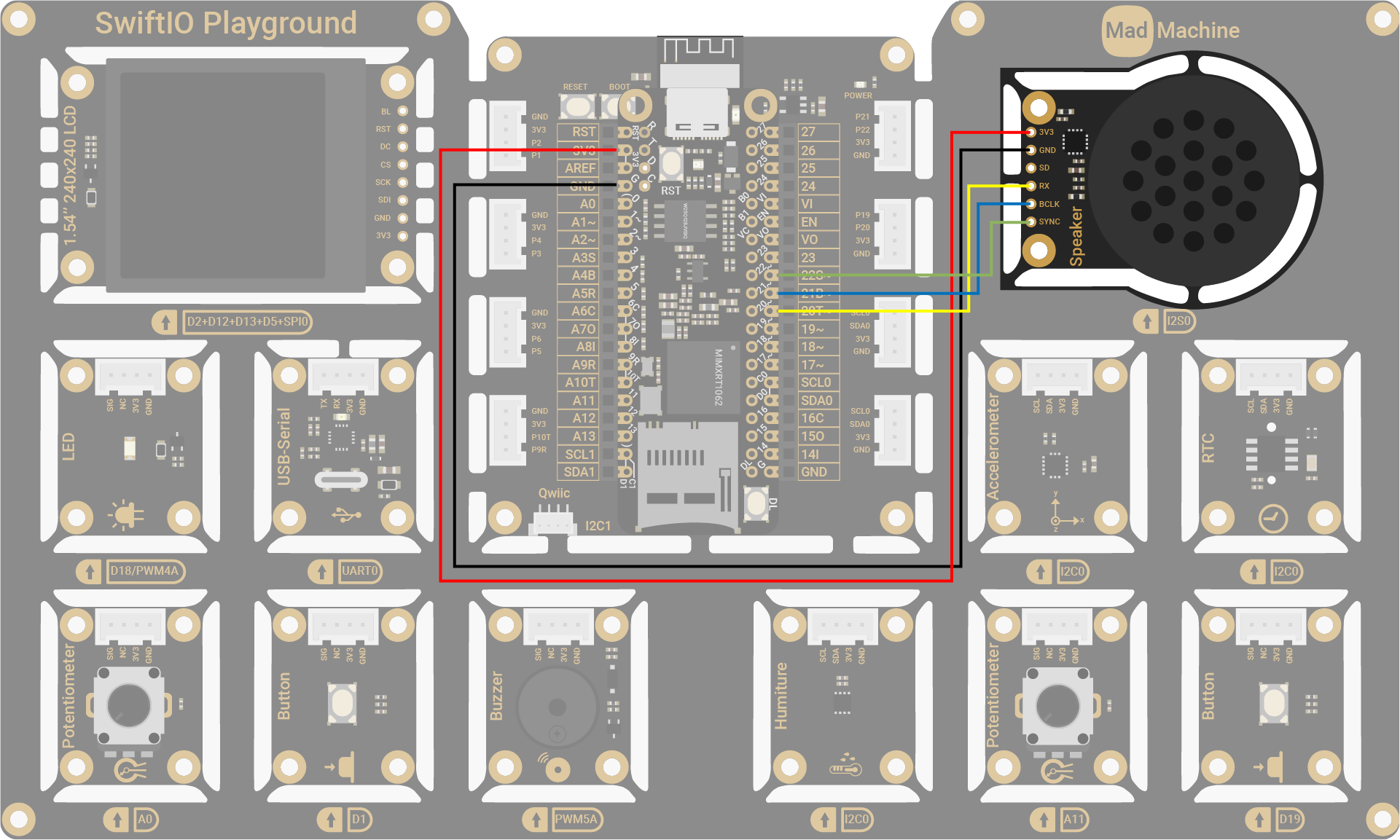

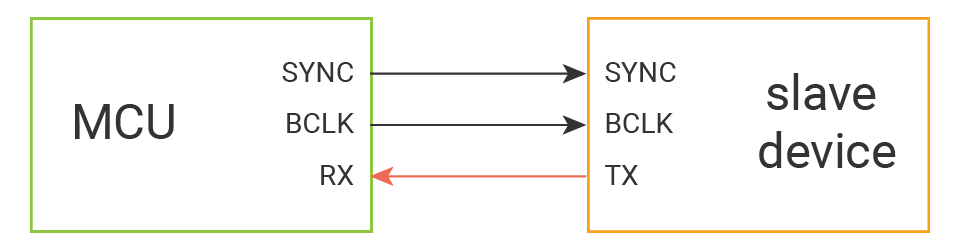

The speaker connects to the MAX98357 amplifier chip, which in turn connects to I2S0 (SYNC0, BCLK0, TX0).

| Speaker Pin | SwiftIO Micro Pin |
|---|---|
| 3V3 | 3V3 |
| GND | GND |
| SD | - |
| RX | I2S0 (TX) |
| BCLK | I2S0 (BCLK) |
| SYNC | I2S0 (SYNC) |

The circuits above are simplified versions for your reference. Download the schematics here.

Connecting the SD pin on the speaker module directly to GND puts the MAX98357 into shutdown mode.

🔸Projects

1. Playing scales

Different waveforms produce different sounds. In this project, you will generate a square wave and a triangle wave manually, then play scales with each of the two sounds.

Example code

You can download the project source code here.

```swift
// Import the SwiftIO library to control input and output.
import SwiftIO
// Import the MadBoard to use the id of the pins.
import MadBoard

// Initialize the speaker using I2S communication.
// The default setting is a 16 kHz sample rate and 16-bit samples.
let speaker = I2S(Id.I2S0)

// The frequencies of notes C to B in octave 4.
let frequency: [Float] = [
    261.626,
    293.665,
    329.628,
    349.228,
    391.995,
    440.000,
    493.883
]

// Set the samples of the waveforms.
let sampleRate = 16_000
let rawSampleLength = 1000
var rawSamples = [Int16](repeating: 0, count: rawSampleLength)
var amplitude: Int16 = 10_000

while true {
    let duration: Float = 1.0

    // Iterate through the frequencies from C to B to play a scale.
    // The sound waveform is a square wave, so you will hear a buzzing sound.
    generateSquare(amplitude: amplitude, &rawSamples)
    for f in frequency {
        playWave(samples: rawSamples, frequency: f, duration: duration)
    }
    sleep(ms: 1000)

    // Iterate through the frequencies from C to B to play a scale.
    // The sound waveform is a triangle wave, and the sound is much softer.
    generateTriangle(amplitude: amplitude, &rawSamples)
    for f in frequency {
        playWave(samples: rawSamples, frequency: f, duration: duration)
    }
    sleep(ms: 1000)

    // Decrease the amplitude to lower the volume.
    // Once it reaches zero or below, it restarts from 10000.
    amplitude -= 1000
    if amplitude <= 0 {
        amplitude = 10_000
    }
}

// Generate samples for one period of a square wave with a specified amplitude and store them in an array.
func generateSquare(amplitude: Int16, _ samples: inout [Int16]) {
    let count = samples.count
    for i in 0..<count / 2 {
        samples[i] = -amplitude
    }
    for i in count / 2..<count {
        samples[i] = amplitude
    }
}

// Generate samples for one period of a triangle wave with a specified amplitude and store them in an array.
func generateTriangle(amplitude: Int16, _ samples: inout [Int16]) {
    let count = samples.count
    // Change between two consecutive samples: the wave climbs from 0 to amplitude in a quarter period.
    let step = Float(amplitude) / Float(count / 4)
    // First quarter: rise from 0 to amplitude.
    for i in 0..<count / 4 {
        samples[i] = Int16(step * Float(i))
    }
    // Middle half: fall from amplitude to -amplitude.
    for i in count / 4..<count / 4 * 3 {
        samples[i] = Int16(Float(amplitude) - step * Float(i - count / 4))
    }
    // Last quarter: rise from -amplitude back toward 0.
    for i in count / 4 * 3..<count {
        samples[i] = Int16(-Float(amplitude) + step * Float(i - count / 4 * 3))
    }
}

// Send the samples over the I2S bus and play the note with a specified frequency and duration.
func playWave(samples: [Int16], frequency: Float, duration: Float) {
    // Total number of output samples for this note.
    let playCount = Int(duration * Float(sampleRate))
    var data = [Int16](repeating: 0, count: playCount)

    // How far to advance through one period of `samples` per output sample.
    let step: Float = frequency * Float(samples.count) / Float(sampleRate)

    // Fade the note out linearly over its duration.
    var volume: Float = 1.0
    let volumeStep = 1.0 / Float(playCount)

    for i in 0..<playCount {
        let pos = Int(Float(i) * step) % samples.count
        data[i] = Int16(Float(samples[pos]) * volume)
        volume -= volumeStep
    }

    // I2S writes bytes, so view the Int16 samples as raw bytes.
    data.withUnsafeBytes { ptr in
        let u8Array = ptr.bindMemory(to: UInt8.self)
        speaker.write(Array(u8Array))
    }
}
```

Code analysis

```swift
import SwiftIO
import MadBoard
```

Import the SwiftIO library to set up I2S communication, and the MadBoard library to use pin ids.

```swift
let speaker = I2S(Id.I2S0)
```

Initialize an I2S interface reserved for the speaker. It uses a 16 kHz sample rate and 16-bit sample depth by default.
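A 16-bit sample takes two bytes, so one second of single-channel audio at 16 kHz is 16,000 samples, or 32,000 bytes. The `write` call used in this tutorial is given bytes, which is why `playWave` later reinterprets its `[Int16]` buffer as `[UInt8]`. The minimal sketch below shows only that conversion; it runs with a desktop Swift toolchain, does not call the SwiftIO API, and `toBytes` is a hypothetical helper name:

```swift
// Hypothetical helper: view an array of 16-bit samples as raw bytes,
// the same reinterpretation playWave performs before calling speaker.write.
func toBytes(_ samples: [Int16]) -> [UInt8] {
    samples.withUnsafeBytes { rawBuffer in
        // UnsafeRawBufferPointer is a collection of UInt8,
        // so it can be copied into an array directly.
        Array(rawBuffer)
    }
}

let sampleRate = 16_000
let bytesPerSample = MemoryLayout<Int16>.size   // 2 bytes for 16-bit depth

// One second of silence at 16 kHz.
let oneSecond = [Int16](repeating: 0, count: sampleRate)
let bytes = toBytes(oneSecond)
print("samples: \(oneSecond.count), bytes: \(bytes.count)")   // samples: 16000, bytes: 32000
```

The bytes of each sample come out in the host's native byte order.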

```swift
let frequency: [Float] = [261.626, 293.665, 329.628, 349.228, 391.995, 440.000, 493.883]
```

Store the frequencies of notes C, D, E, F, G, A, and B in octave 4. Together they form a scale, which the speaker will play.

```swift
let sampleRate = 16_000
let rawSampleLength = 1000
var rawSamples = [Int16](repeating: 0, count: rawSampleLength)
var amplitude: Int16 = 10_000
```

Define the parameters for the audio data:

- `sampleRate`: the signal is sampled at 16000 Hz, so there are 16000 samples per second.

- `rawSampleLength` sets how many samples make up one period of the generated wave.

- `rawSamples` stores the samples of one period of the audio signal. All values start at 0, and its length is `rawSampleLength`.

- `amplitude` is the peak value of the wave and should be positive.

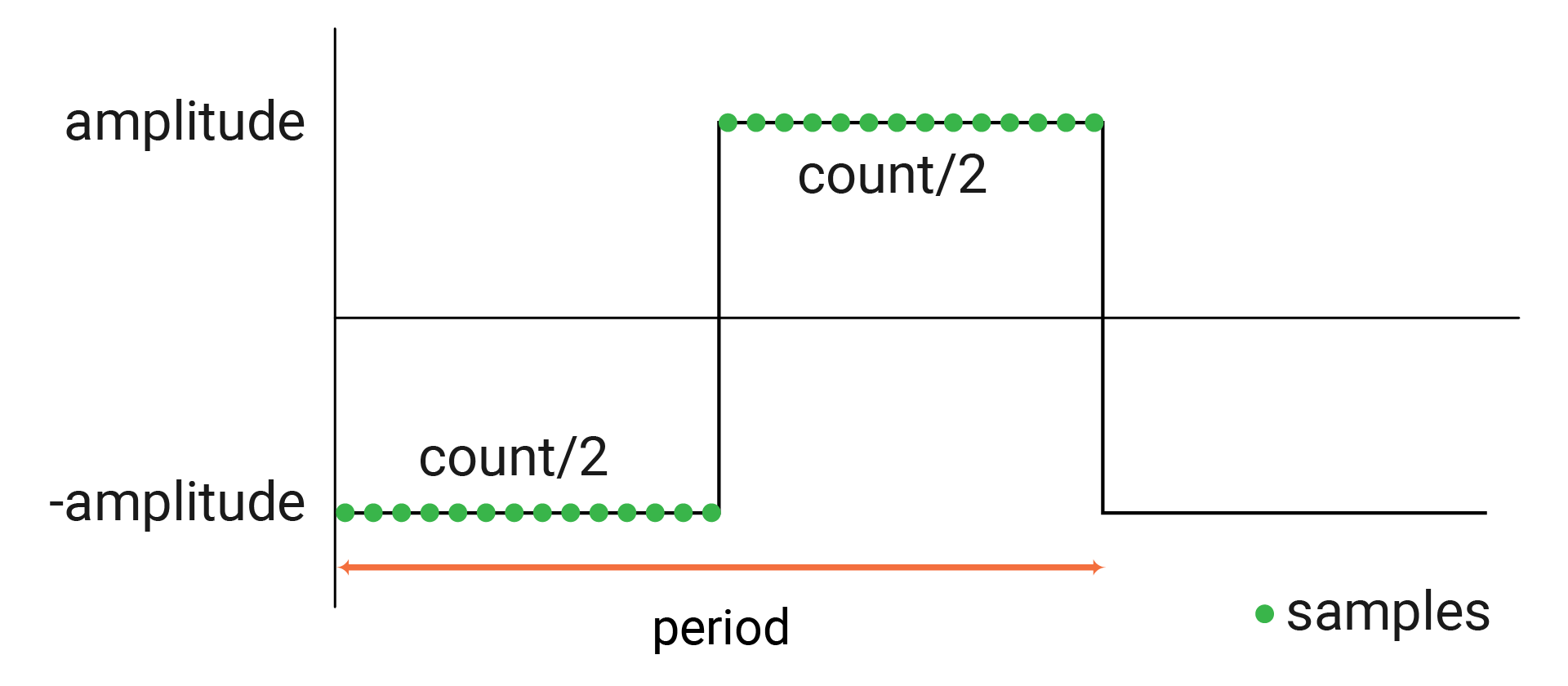

```swift
func generateSquare(amplitude: Int16, _ samples: inout [Int16]) {
    let count = samples.count
    for i in 0..<count / 2 {
        samples[i] = -amplitude
    }
    for i in count / 2..<count {
        samples[i] = amplitude
    }
}
```

This function generates a periodic square wave. You only need to calculate the samples in one period, because every following period repeats the same samples. The parameter `samples` is the array that stores the audio data; it is marked `inout` so the function can modify it in place.

A square wave alternates between only two levels, so the calculation is simple: the samples in the first half are all `-amplitude`, and those in the second half are all `amplitude`.

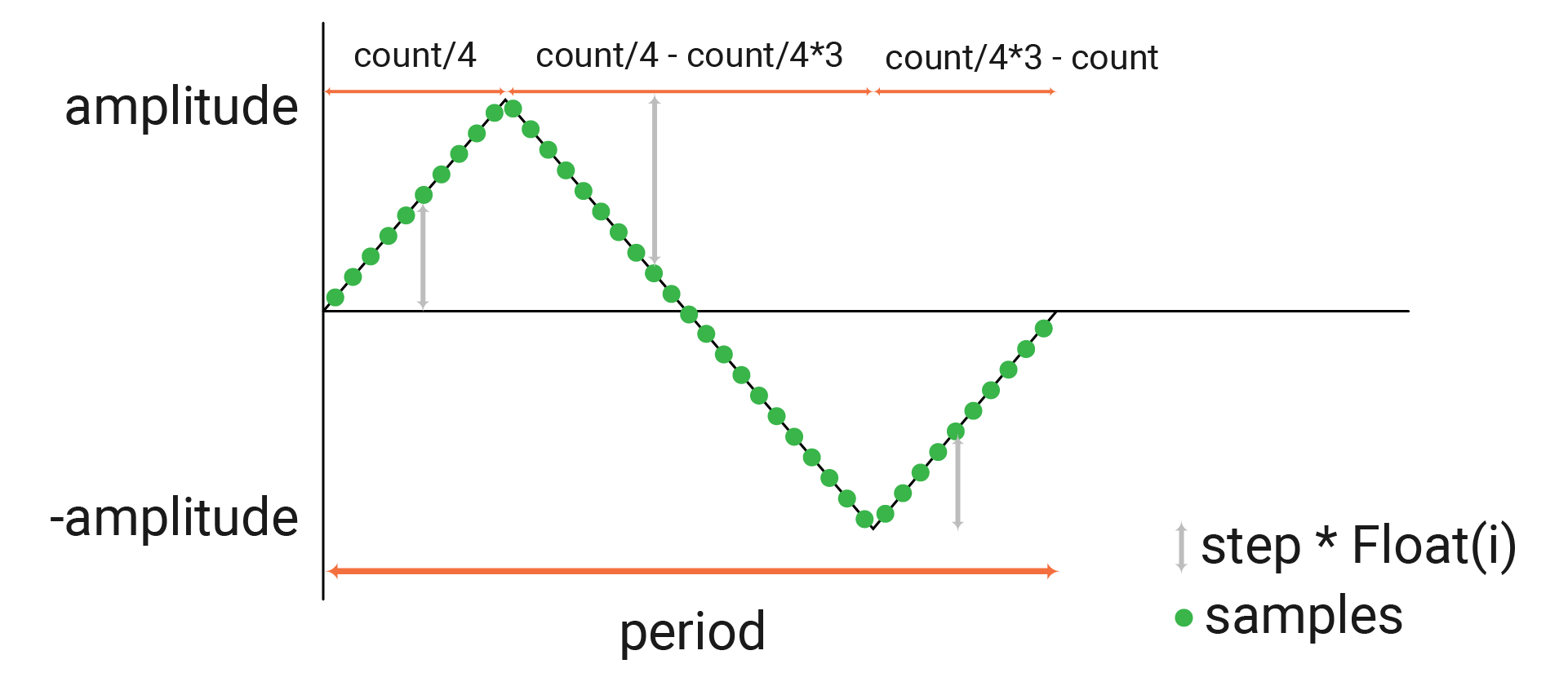

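```swift
func generateTriangle(amplitude: Int16, _ samples: inout [Int16]) {
    let count = samples.count
    // Change between two consecutive samples: the wave climbs from 0 to amplitude in a quarter period.
    let step = Float(amplitude) / Float(count / 4)
    // First quarter: rise from 0 to amplitude.
    for i in 0..<count / 4 {
        samples[i] = Int16(step * Float(i))
    }
    // Middle half: fall from amplitude to -amplitude.
    for i in count / 4..<count / 4 * 3 {
        samples[i] = Int16(Float(amplitude) - step * Float(i - count / 4))
    }
    // Last quarter: rise from -amplitude back toward 0.
    for i in count / 4 * 3..<count {
        samples[i] = Int16(-Float(amplitude) + step * Float(i - count / 4 * 3))
    }
}
```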

This function generates the samples of one period of a triangle wave. The constant `count` is the total number of samples in the period, and `step` is the change between two consecutive samples.

The samples change linearly and are divided into three parts:

- In the first quarter, the samples gradually increase from 0 to the maximum (`amplitude`).

- In the middle half, the samples decrease from the maximum (`amplitude`) to the minimum (`-amplitude`).

- In the last quarter, the samples rise from the minimum (`-amplitude`) back toward 0.
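To double-check the three parts, you can also compute each sample straight from its position within the period. The sketch below is an illustrative alternative rather than the tutorial's code: `triangleSample` is a hypothetical helper that maps the phase `i / count` onto the rising, falling, and rising-again parts, assuming the same 1000-sample period and amplitude of 10000 used above. It then checks that the wave reaches `amplitude` and `-amplitude` at the quarter points and that neighboring samples differ only by about one step:

```swift
// Hypothetical helper: sample i of one triangle-wave period made of `count` samples.
func triangleSample(_ i: Int, count: Int, amplitude: Int16) -> Int16 {
    let phase = Float(i) / Float(count)   // position within the period, 0 ..< 1
    let peak = Float(amplitude)
    let value: Float
    if phase < 0.25 {
        value = 4 * phase * peak          // first quarter: 0 -> amplitude
    } else if phase < 0.75 {
        value = (2 - 4 * phase) * peak    // middle half: amplitude -> -amplitude
    } else {
        value = (4 * phase - 4) * peak    // last quarter: -amplitude -> 0
    }
    return Int16(value)
}

let count = 1000
let amplitude: Int16 = 10_000
let wave = (0..<count).map { triangleSample($0, count: count, amplitude: amplitude) }

// Largest change between two neighboring samples: about amplitude / (count / 4).
let largestJump = zip(wave, wave.dropFirst())
    .map { pair in abs(Int(pair.1) - Int(pair.0)) }
    .max() ?? 0

print(wave[0], wave[count / 4], wave[count / 2], wave[count / 4 * 3])   // 0 10000 0 -10000
print("largest jump:", largestJump)
```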

```swift
func playWave(samples: [Int16], frequency: Float, duration: Float) {
    let playCount = Int(duration * Float(sampleRate))
    var data = [Int16](repeating: 0, count: playCount)
    let step: Float = frequency * Float(samples.count) / Float(sampleRate)
    var volume: Float = 1.0
    let volumeStep = 1.0 / Float(playCount)

    for i in 0..<playCount {
        let pos = Int(Float(i) * step) % samples.count
        data[i] = Int16(Float(samples[pos]) * volume)
        volume -= volumeStep
    }

    data.withUnsafeBytes { ptr in
        let u8Array = ptr.bindMemory(to: UInt8.self)
        speaker.write(Array(u8Array))
    }
}
```

This function sends the samples to audio devices over an I2S bus.

-

playCountcalculates the total amount of samples.sampleRateis the amount of samples in 1s, anddurationis a specified time in seconds. If the note duration is 2 seconds and the sample rate is 16000Hz, the sample count equals 32000. -

The array

datais used to store the audio data for the speaker. All elements are 0 by default, whose count equals the count of samples calculated before. -

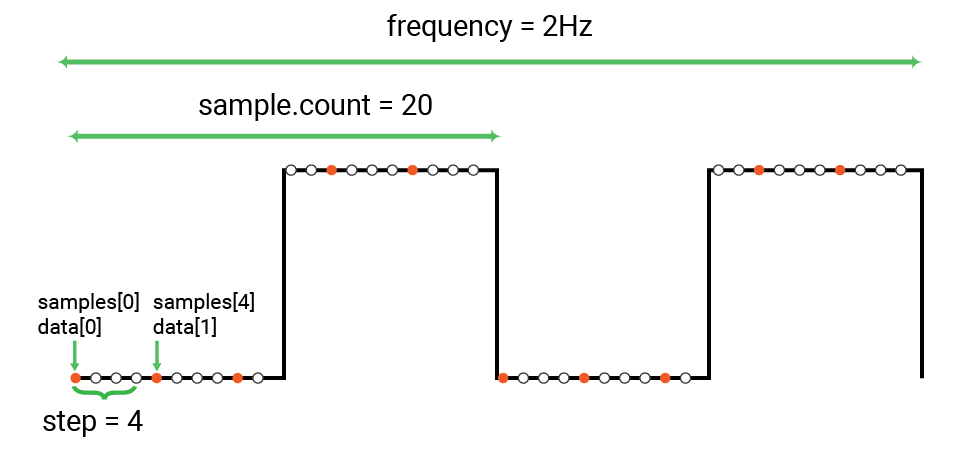

To better understand the constant

step, assuming a square wave that has 20 samples in a period. Its frequency is 2Hz. Therefore, there will be 40 samples in total in one second. If the audio sample rate is at 10Hz, it needs only 10 samples in one second. So you can choose some of the samples: 1 sample every 4 samples, like samples[0], samples[4]... So the step here is 20 * 2 / 10 = 4.

-

volumeandvolumeStepare used to reduce the volume of each note, so it sounds more natural. You could delete the statementvolume -= volumeStepand see how it sounds. If theplayCountis 10, thevolumewill be 1, 0,9, 0.8... for each data to fade out the sound. -

In the

for-inloop, you will store the desired samples into the arraydata.posgets the index of the sample insamples. In the wave above, theposis 0, 4, 8, 12, 16. Whenposequals 20, it refers to the first sample in the next period. The samples are the same with those in the first peropd, so it restarts from 0. After the samples are multiplied by volume, you get gradually decreased sound. -

Send the data using I2S communication so that the speaker plays the note.
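The numbers in the `step` and `volume` explanations can be reproduced without any hardware. This sketch reuses the same formulas as `playWave`, but with the toy values from the text (a 20-sample period, a 2 Hz wave, and a 10 Hz output rate); it only computes indices and volume factors and sends nothing to the speaker:

```swift
// Worked example from the text: a 20-sample period played at 2 Hz
// with an (unrealistically low) output sample rate of 10 Hz.
let samplesPerPeriod = 20
let frequency: Float = 2
let sampleRate: Float = 10
let duration: Float = 1                                           // seconds

let playCount = Int(duration * sampleRate)                        // 10 output samples
let step = frequency * Float(samplesPerPeriod) / sampleRate       // 4.0

var positions: [Int] = []
var volumes: [Float] = []
var volume: Float = 1.0
let volumeStep = 1.0 / Float(playCount)

for i in 0..<playCount {
    // Index into one period; % wraps around to the start of the next period.
    positions.append(Int(Float(i) * step) % samplesPerPeriod)
    volumes.append(volume)
    volume -= volumeStep
}

print("step:", step)             // step: 4.0
print("positions:", positions)   // positions: [0, 4, 8, 12, 16, 0, 4, 8, 12, 16]
```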

```swift
while true {
    let duration: Float = 1.0

    generateSquare(amplitude: amplitude, &rawSamples)
    for f in frequency {
        playWave(samples: rawSamples, frequency: f, duration: duration)
    }
    sleep(ms: 1000)

    generateTriangle(amplitude: amplitude, &rawSamples)
    for f in frequency {
        playWave(samples: rawSamples, frequency: f, duration: duration)
    }
    sleep(ms: 1000)

    amplitude -= 1000
    if amplitude <= 0 {
        amplitude = 10_000
    }
}
```

In the `while` loop, the speaker plays scales over and over again.

- First, the samples are generated from a square wave and used to play a scale, so the sound is like what you hear from a buzzer.

- Next, the samples come from a triangle wave, so the sound is softer and clearer.

- Finally, `amplitude` decreases by 1000 to turn down the speaker. The sound gets quieter with each pass through the loop until `amplitude` reaches 0; it is then reset to 10000 and the cycle repeats.

2. Music player

Play music using the speaker. You can also pass in other scores to play different songs.

Music notes

Let's explore some common concepts in music together.

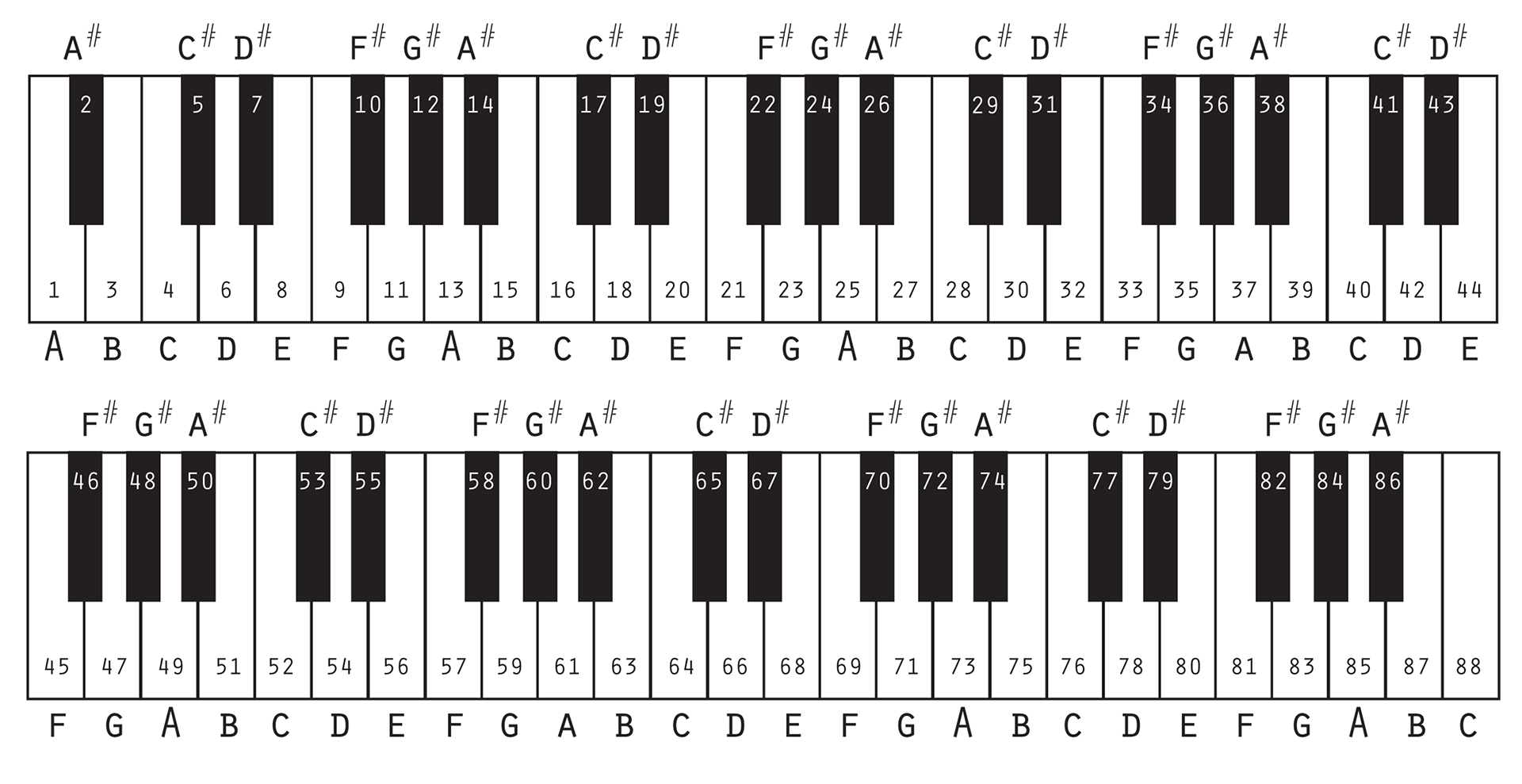

A standard piano keyboard typically consists of 88 keys, ranging from A0 to C8. Each key represents a different musical pitch or frequency from low to high.

A half step, or semitone, is the smallest interval between two keys on a piano. The interval between the keys A and A# is a half step. Two half steps make a whole step, like the interval between A and B.
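The frequency values used in this tutorial follow twelve-tone equal temperament, where each half step multiplies the frequency by the twelfth root of 2. A minimal sketch, assuming the standard A4 = 440 Hz reference and the same key numbering as the `Note` enum later in the code (A0 = 1, A4 = 49, C8 = 88); `keyFrequency` is a hypothetical helper, not part of SwiftIO, and its results agree with `frequencyTable` to the precision listed there:

```swift
import Foundation

// Twelve-tone equal temperament: every half step multiplies the frequency by 2^(1/12),
// so 12 half steps (one octave) exactly double it.
// Assumption: key numbers follow the 88-key piano layout with key 49 = A4 = 440 Hz.
func keyFrequency(_ key: Int) -> Double {
    return 440.0 * pow(2.0, Double(key - 49) / 12.0)
}

let halfStepRatio = pow(2.0, 1.0 / 12.0)   // about 1.0595
print("half-step ratio:", halfStepRatio)

for (name, key) in [("A4", 49), ("A#4", 50), ("C4", 40), ("A5", 61), ("C8", 88)] {
    print(name, String(format: "%.3f Hz", keyFrequency(key)))
}
```

Shifting every note by a fixed number of half steps, as the player's `halfStep` property does, therefore scales all frequencies by the same ratio.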

Beat is a basic unit of time in music. When you tap your toes along with a song, you actually follow its beat.

In music, beats are grouped into sections called measures or bars. A measure usually consists of several beats.

A quarter note is the most common note length in music and usually lasts one beat. The other notes are defined relative to it: when a quarter note is one beat, a half note has two beats, a whole note has four beats, an eighth note has half a beat, and so on.

A time signature describes how many beats are in a measure and which note gets one beat. The 4/4 time signature is the most widely used: each measure has 4 beats, a quarter note is one beat, an eighth note is half a beat, and so on. In 2/4, each measure has 2 beats and a quarter note is one beat. You could learn more about it here.

BPM, or beats per minute, measures the tempo of a piece of music. For example, 60 BPM means 60 beats in a minute, so each beat lasts 1 second.
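Putting BPM and the time signature together gives the length of any note in seconds: one beat lasts 60 / BPM seconds, and a note spans `noteValuePerBeat / noteValue` beats. The sketch below is a stand-alone version of that arithmetic, assuming the tutorial's convention that the note value is 1 for a whole note, 2 for a half note, 4 for a quarter note, and so on; `noteDuration` is a hypothetical helper, though the player code later uses the same formula:

```swift
// Duration of a note in seconds.
// noteValue: 1 = whole, 2 = half, 4 = quarter, 8 = eighth, 16 = sixteenth.
// noteValuePerBeat: the note value that counts as one beat (4 in 4/4 time).
func noteDuration(noteValue: Int, bpm: Int, noteValuePerBeat: Int) -> Float {
    let beatDuration = 60.0 / Float(bpm)   // seconds per beat
    return beatDuration * Float(noteValuePerBeat) / Float(noteValue)
}

// 60 BPM in 4/4 time: a quarter note (one beat) lasts 1 second.
for (name, value) in [("whole", 1), ("half", 2), ("quarter", 4), ("eighth", 8), ("sixteenth", 16)] {
    print(name, noteDuration(noteValue: value, bpm: 60, noteValuePerBeat: 4))
}
```

Doubling the tempo to 120 BPM halves every duration, so a quarter note then lasts 0.5 seconds.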

Project overview

- The score stores each note and its note value, from which the frequency and duration of the note are calculated.

- Generate the samples by calculating the value of the chosen waveform (square or triangle) at each sample point.

- To make each note sound more natural, the samples at the start and end of each note are scaled down gradually, so the note fades in and out over a specified fade duration.

- If there are multiple tracks, their samples are summed and then averaged, one bar at a time.

- Then send the samples to the speaker using I2S communication.
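Averaging tracks needs a little care with integer ranges: two loud 16-bit samples can add up to more than `Int16.max` (32767). A minimal sketch of the sum-then-average idea, using a 32-bit accumulator much like the player's `Int32` buffer; `mix` is a hypothetical helper, and a track shorter than the others simply contributes silence after its end:

```swift
// Hypothetical helper: mix several tracks by summing in Int32, then dividing by the track count.
func mix(_ tracks: [[Int16]]) -> [Int16] {
    let length = tracks.map { $0.count }.max() ?? 0
    var sum = [Int32](repeating: 0, count: length)
    for track in tracks {
        for (i, sample) in track.enumerated() {
            // Int32 holds the sum of many Int16 samples without overflowing.
            sum[i] += Int32(sample)
        }
    }
    // Average back into the Int16 range.
    return sum.map { Int16($0 / Int32(tracks.count)) }
}

let melody: [Int16] = [20_000, 20_000, -20_000]
let harmony: [Int16] = [20_000, -20_000, -20_000]
// 20_000 + 20_000 would overflow Int16, but the Int32 sum is fine.
print(mix([melody, harmony]))   // [20000, 0, -20000]
```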

Example code

You can download the project source code here.

- main.swift

- Player.swift

- Note.swift

- Mario.swift

```swift
// Play a song using a speaker.
import SwiftIO
import MadBoard

// The sample rate of I2S and Player should be the same.
// Note: the speaker expects stereo data but only plays the left-channel samples.
// Frequencies below 200 Hz may sound a little fuzzy with this speaker.
let speaker = I2S(Id.I2S0, rate: 16_000)

// BPM is the beat count per minute.
// The time signature specifies the beats per bar and the note value of a beat.
let player = Player(speaker, sampleRate: 16_000)
player.bpm = Mario.bpm
player.timeSignature = Mario.timeSignature

// Play the music using the tracks.
player.playTracks(Mario.tracks, waveforms: Mario.trackWaveforms, amplitudeRatios: Mario.amplitudeRatios)

// Keep the program running after the song ends.
while true {
    sleep(ms: 1000)
}
```

```swift
import SwiftIO

/// Play music using the given tracks.
/// Sound samples are generated from a square or triangle wave and sent over I2S.
public class Player {
    public typealias NoteInfo = (note: Note, noteValue: Int)
    public typealias TimeSignature = (beatsPerBar: Int, noteValuePerBeat: Int)

    /// Beats per minute. It sets the speed of the music.
    public var bpm: Int = 60
    /// The beat count per bar and the note value for a beat.
    public var timeSignature: TimeSignature = (4, 4)
    /// Set the overall note pitch. 12 half steps constitute an octave.
    public var halfStep = 0
    /// Fade in/out duration for each note in seconds.
    public var fadeDuration: Float = 0.01

    let amplitude = 16383
    var beatDuration: Float { 60.0 / Float(bpm) }
    var sampleRate: Float
    var speaker: I2S
    var buffer32 = [Int32](repeating: 0, count: 200_000)
    var buffer16 = [Int16](repeating: 0, count: 200_000)

    /// Initialize a player.
    /// - Parameters:
    ///   - speaker: An I2S interface.
    ///   - sampleRate: The sample count per second. It should be the same as that of I2S.
    public init(_ speaker: I2S, sampleRate: Float) {
        self.speaker = speaker
        self.sampleRate = sampleRate
    }

    /// Calculate and combine the data of all tracks, and then play the sound.
    ///
    /// - Parameters:
    ///   - tracks: Different tracks of a piece of music. A track consists of
    ///     notes and their note values. For example, the note value of a quarter
    ///     note is 4, and that of a half note is 2.
    ///   - waveforms: The waveforms for each track used to generate sound samples.
    ///   - amplitudeRatios: The ratios used to control the loudness of each track.
    public func playTracks(
        _ tracks: [[NoteInfo]],
        waveforms: [Waveform],
        amplitudeRatios: [Float]
    ) {
        let beatCount = tracks[0].reduce(0) {
            $0 + Float(timeSignature.noteValuePerBeat) / Float($1.noteValue)
        }
        let barCount = Int(beatCount / Float(timeSignature.beatsPerBar))

        for barIndex in 0..<barCount {
            getBarData(tracks, barIndex: barIndex, waveforms: waveforms, amplitudeRatios: amplitudeRatios, data: &buffer32)

            // Two values (left and right channel) per sample point.
            let count = Int(Float(timeSignature.beatsPerBar) * beatDuration * sampleRate * 2)
            for i in 0..<count {
                // Average the summed tracks so the result fits in Int16.
                buffer16[i] = Int16(buffer32[i] / Int32(tracks.count))
            }
            sendData(data: buffer16, count: count)
        }
    }

    /// Calculate all data of the track and play the sound.
    /// - Parameters:
    ///   - track: Score of a melody in the form of notes and note values.
    ///     For example, the note value of a quarter note is 4, and that of a half note is 2.
    ///   - waveform: The waveform used to generate sound samples.
    ///   - amplitudeRatio: The ratio used to control the loudness.
    public func playTrack(
        _ track: [NoteInfo],
        waveform: Waveform,
        amplitudeRatio: Float
    ) {
        for noteInfo in track {
            playNote(noteInfo, waveform: waveform, amplitudeRatio: amplitudeRatio)
        }
    }

    /// Generate data for the specified note and play the sound.
    /// - Parameters:
    ///   - noteInfo: The note and its note value.
    ///   - waveform: The waveform used to generate sound samples.
    ///   - amplitudeRatio: The ratio used to control the loudness.
    public func playNote(
        _ noteInfo: NoteInfo,
        waveform: Waveform,
        amplitudeRatio: Float
    ) {
        let duration = calculateNoteDuration(noteInfo.noteValue)

        var frequency: Float = 0
        if noteInfo.note == .rest {
            frequency = 0
        } else {
            frequency = frequencyTable[noteInfo.note.rawValue + halfStep]!
        }

        let sampleCount = Int(duration * sampleRate)
        for i in 0..<sampleCount {
            let sample = getNoteSample(
                at: i, frequency: frequency,
                noteDuration: duration,
                waveform: waveform,
                amplitudeRatio: amplitudeRatio)
            // Write the same sample to the left and right channels.
            buffer16[i * 2] = sample
            buffer16[i * 2 + 1] = sample
        }
        sendData(data: buffer16, count: sampleCount * 2)
    }
}

extension Player {
    /// Calculate data of all notes in a bar.
    func getBarData(
        _ tracks: [[NoteInfo]],
        barIndex: Int,
        waveforms: [Waveform],
        amplitudeRatios: [Float],
        data: inout [Int32]
    ) {
        for i in data.indices {
            data[i] = 0
        }

        for trackIndex in tracks.indices {
            let track = tracks[trackIndex]
            let noteIndices = getNotesInBar(at: barIndex, in: track)
            var start = 0

            for index in noteIndices {
                getNoteData(
                    noteInfo: track[index],
                    startIndex: start,
                    waveform: waveforms[trackIndex],
                    amplitudeRatio: amplitudeRatios[trackIndex],
                    data: &data)
                start += Int(calculateNoteDuration(track[index].noteValue) * sampleRate * 2)
            }
        }
    }

    /// Calculate data of a note.
    func getNoteData(
        noteInfo: NoteInfo,
        startIndex: Int,
        waveform: Waveform,
        amplitudeRatio: Float,
        data: inout [Int32]
    ) {
        guard noteInfo.noteValue > 0 else { return }

        let duration = calculateNoteDuration(noteInfo.noteValue)

        var frequency: Float = 0
        if noteInfo.note == .rest {
            frequency = 0
        } else {
            frequency = frequencyTable[noteInfo.note.rawValue + halfStep]!
        }

        for i in 0..<Int(duration * sampleRate) {
            let sample = Int32(getNoteSample(at: i, frequency: frequency, noteDuration: duration, waveform: waveform, amplitudeRatio: amplitudeRatio))
            data[i * 2 + startIndex] += sample
            data[i * 2 + startIndex + 1] += sample
        }
    }

    /// Get the indices of notes in the track within a specified bar.
    func getNotesInBar(at barIndex: Int, in track: [NoteInfo]) -> [Int] {
        var indices: [Int] = []
        var index = 0
        var sum: Float = 0

        while Float(timeSignature.beatsPerBar * (barIndex + 1)) - sum > 0.1 && index < track.count {
            sum += Float(timeSignature.noteValuePerBeat) / Float(track[index].noteValue)
            if sum - Float(timeSignature.beatsPerBar * barIndex) > 0.1 {
                indices.append(index)
            }
            index += 1
        }

        return indices
    }

    /// Send the generated data using I2S protocol to play the sound.
    func sendData(data: [Int16], count: Int) {
        data.withUnsafeBytes { ptr in
            let u8Array = ptr.bindMemory(to: UInt8.self)
            // Each Int16 sample is two bytes.
            speaker.write(Array(u8Array), count: count * 2)
        }
    }

    /// Calculate the duration of the given note value in seconds.
    func calculateNoteDuration(_ noteValue: Int) -> Float {
        return beatDuration * (Float(timeSignature.noteValuePerBeat) / Float(noteValue))
    }

    /// Calculate a sample at a specified index of a note.
    /// The samples within the fade-in/out duration are scaled down to get a more
    /// natural sound effect.
    func getNoteSample(
        at index: Int,
        frequency: Float,
        noteDuration: Float,
        waveform: Waveform,
        amplitudeRatio: Float
    ) -> Int16 {
        if frequency == 0 { return 0 }

        var sample: Float = 0
        switch waveform {
        case .square:
            sample = getSquareSample(at: index, frequency: frequency, amplitudeRatio: amplitudeRatio)
        case .triangle:
            sample = getTriangleSample(at: index, frequency: frequency, amplitudeRatio: amplitudeRatio)
        }

        let fadeInEnd = Int(fadeDuration * sampleRate)
        let fadeOutStart = Int((noteDuration - fadeDuration) * sampleRate)
        let fadeSampleCount = fadeDuration * sampleRate
        let sampleCount = Int(noteDuration * sampleRate)

        switch index {
        case 0..<fadeInEnd:
            sample *= Float(index) / fadeSampleCount
        case fadeOutStart..<sampleCount:
            sample *= Float(sampleCount - index) / fadeSampleCount
        default:
            break
        }

        return Int16(sample * Float(amplitude))
    }

    /// Calculate the raw sample of a specified point from a triangle wave.
    /// It sounds much softer than a square wave.
    func getTriangleSample(
        at index: Int,
        frequency: Float,
        amplitudeRatio: Float
    ) -> Float {
        let period = sampleRate / frequency
        let sawWave = Float(index) / period - Float(Int(Float(index) / period + 0.5))
        let triWave = 2 * abs(2 * sawWave) - 1
        return triWave * amplitudeRatio
    }

    /// Calculate the raw sample of a specified point from a square wave.
    /// It sounds a little harsh.
    func getSquareSample(
        at index: Int,
        frequency: Float,
        amplitudeRatio: Float
    ) -> Float {
        let period = Int(sampleRate / frequency)
        if index % period < period / 2 {
            return -amplitudeRatio
        } else {
            return amplitudeRatio
        }
    }
}

public enum Waveform {
    case square
    case triangle
}
```

```swift
/// Frequencies (in Hz) of the 88 piano keys, indexed by key number; 0 is used for a rest.
/// Indexing by number makes it easy to raise or lower the pitch by half steps.
let frequencyTable: [Int: Float] = [
    0: 0,         1: 27.5,      2: 29.1352,   3: 30.8677,
    4: 32.7032,   5: 34.6478,   6: 36.7081,   7: 38.8909,
    8: 41.2034,   9: 43.6535,   10: 46.2493,  11: 48.9994,
    12: 51.9131,  13: 55,       14: 58.2705,  15: 61.7354,
    16: 65.4064,  17: 69.2957,  18: 73.4162,  19: 77.7817,
    20: 82.4069,  21: 87.3071,  22: 92.4986,  23: 97.9989,
    24: 103.826,  25: 110,      26: 116.541,  27: 123.471,
    28: 130.813,  29: 138.591,  30: 146.832,  31: 155.563,
    32: 164.814,  33: 174.614,  34: 184.997,  35: 195.998,
    36: 207.652,  37: 220,      38: 233.082,  39: 246.942,
    40: 261.626,  41: 277.183,  42: 293.665,  43: 311.127,
    44: 329.628,  45: 349.228,  46: 369.994,  47: 391.995,
    48: 415.305,  49: 440,      50: 466.164,  51: 493.883,
    52: 523.251,  53: 554.365,  54: 587.33,   55: 622.254,
    56: 659.255,  57: 698.456,  58: 739.989,  59: 783.991,
    60: 830.609,  61: 880,      62: 932.328,  63: 987.767,
    64: 1046.5,   65: 1108.73,  66: 1174.66,  67: 1244.51,
    68: 1318.51,  69: 1396.91,  70: 1479.98,  71: 1567.98,
    72: 1661.22,  73: 1760,     74: 1864.66,  75: 1975.53,
    76: 2093,     77: 2217.46,  78: 2349.32,  79: 2489.02,
    80: 2637.02,  81: 2793.83,  82: 2959.96,  83: 3135.96,
    84: 3322.44,  85: 3520,     86: 3729.31,  87: 3951.07,
    88: 4186.01
]

/// Piano keys from A0 (1) to C8 (88). "S" marks a sharp, e.g. AS4 is A#4.
public enum Note: Int {
    case A0 = 1, AS0 = 2, B0 = 3
    case C1 = 4, CS1 = 5, D1 = 6, DS1 = 7, E1 = 8, F1 = 9
    case FS1 = 10, G1 = 11, GS1 = 12, A1 = 13, AS1 = 14, B1 = 15
    case C2 = 16, CS2 = 17, D2 = 18, DS2 = 19, E2 = 20, F2 = 21
    case FS2 = 22, G2 = 23, GS2 = 24, A2 = 25, AS2 = 26, B2 = 27
    case C3 = 28, CS3 = 29, D3 = 30, DS3 = 31, E3 = 32, F3 = 33
    case FS3 = 34, G3 = 35, GS3 = 36, A3 = 37, AS3 = 38, B3 = 39
    case C4 = 40, CS4 = 41, D4 = 42, DS4 = 43, E4 = 44, F4 = 45
    case FS4 = 46, G4 = 47, GS4 = 48, A4 = 49, AS4 = 50, B4 = 51
    case C5 = 52, CS5 = 53, D5 = 54, DS5 = 55, E5 = 56, F5 = 57
    case FS5 = 58, G5 = 59, GS5 = 60, A5 = 61, AS5 = 62, B5 = 63
    case C6 = 64, CS6 = 65, D6 = 66, DS6 = 67, E6 = 68, F6 = 69
    case FS6 = 70, G6 = 71, GS6 = 72, A6 = 73, AS6 = 74, B6 = 75
    case C7 = 76, CS7 = 77, D7 = 78, DS7 = 79, E7 = 80, F7 = 81
    case FS7 = 82, G7 = 83, GS7 = 84, A7 = 85, AS7 = 86, B7 = 87
    case C8 = 88
    case rest = 0
}
```

struct Mario {

static let track0: [Player.NoteInfo] = [

// Bar1

(.E5, 16),

(.E5, 16),

(.rest, 16),

(.E5, 16),

(.rest, 16),

(.C5, 16),

(.E5, 16),

(.rest, 16),

(.G5, 16),

(.rest, 16),

(.rest, 8),

(.rest, 4),

// Bar2

(.C5, 16),

(.rest, 16),

(.rest, 16),

(.G4, 16),

(.rest, 8),

(.E4, 16),

(.rest, 16),

(.rest, 16),

(.A4, 16),

(.rest, 16),

(.B4, 16),

(.rest, 16),

(.AS4, 16),

(.A4, 16),

(.rest, 16),

// Bar3

(.G4, 12),

(.E5, 12),

(.G5, 12),

(.A5, 16),

(.rest, 16),

(.F5, 16),

(.G5, 16),

(.rest, 16),

(.E5, 16),

(.rest, 16),

(.C5, 16),

(.D5, 16),

(.B4, 16),

(.rest, 8),

// Bar4

(.C5, 16),

(.rest, 16),

(.rest, 16),

(.G4, 16),

(.rest, 8),

(.E4, 16),

(.rest, 16),

(.rest, 16),

(.A4, 16),

(.rest, 16),

(.B4, 16),

(.rest, 16),

(.AS4, 16),

(.A4, 16),

(.rest, 16),

// Bar5 (3)

(.G4, 12),

(.E5, 12),

(.G5, 12),

(.A5, 16),

(.rest, 16),

(.F5, 16),

(.G5, 16),

(.rest, 16),

(.E5, 16),

(.rest, 16),

(.C5, 16),

(.D5, 16),

(.B4, 16),

(.rest, 8),

// Bar6

(.rest, 8),

(.G5, 16),

(.FS5, 16),

(.F5, 16),

(.DS5, 16),

(.rest, 16),

(.E5, 16),

(.rest, 16),

(.GS4, 16),

(.A4, 16),

(.C5, 16),

(.rest, 16),

(.A4, 16),

(.C5, 16),

(.D5, 16),

// Bar7

(.rest, 8),

(.G5, 16),

(.FS5, 16),

(.F5, 16),

(.DS5, 16),

(.rest, 16),

(.E5, 16),

(.rest, 16),

(.C6, 16),

(.rest, 16),

(.C6, 16),

(.C6, 16),

(.rest, 16),

(.rest, 8),

// Bar8 (6)

(.rest, 8),

(.G5, 16),

(.FS5, 16),

(.F5, 16),

(.DS5, 16),

(.rest, 16),

(.E5, 16),

(.rest, 16),

(.GS4, 16),

(.A4, 16),

(.C5, 16),

(.rest, 16),

(.A4, 16),

(.C5, 16),

(.D5, 16),

// Bar9

(.rest, 8),

(.DS5, 16),

(.rest, 16),

(.rest, 16),

(.D5, 16),

(.rest, 8),

(.C5, 16),

(.rest, 16),

(.rest, 8),

(.rest, 4),

// Bar10 (6)

(.rest, 8),

(.G5, 16),

(.FS5, 16),

(.F5, 16),

(.DS5, 16),

(.rest, 16),

(.E5, 16),

(.rest, 16),

(.GS4, 16),

(.A4, 16),

(.C5, 16),

(.rest, 16),

(.A4, 16),

(.C5, 16),

(.D5, 16),

// Bar11 (7)

(.rest, 8),

(.G5, 16),

(.FS5, 16),

(.F5, 16),

(.DS5, 16),

(.rest, 16),

(.E5, 16),

(.rest, 16),

(.C6, 16),

(.rest, 16),

(.C6, 16),

(.C6, 16),

(.rest, 16),

(.rest, 8),

// Bar12 (6)

(.rest, 8),

(.G5, 16),

(.FS5, 16),

(.F5, 16),

(.DS5, 16),

(.rest, 16),

(.E5, 16),

(.rest, 16),

(.GS4, 16),

(.A4, 16),

(.C5, 16),

(.rest, 16),

(.A4, 16),

(.C5, 16),

(.D5, 16),

// Bar13 (9)

(.rest, 8),

(.DS5, 16),

(.rest, 16),

(.rest, 16),

(.D5, 16),

(.rest, 8),

(.C5, 16),

(.rest, 16),

(.rest, 8),

(.rest, 4),

// Bar14

(.C5, 16),

(.C5, 16),

(.rest, 16),

(.C5, 16),

(.rest, 16),

(.C5, 16),

(.D5, 16),

(.rest, 16),

(.E5, 16),

(.C5, 16),

(.rest, 16),

(.A4, 16),

(.G4, 16),

(.rest, 16),

(.rest, 8),

// Bar15

(.C5, 16),

(.C5, 16),

(.rest, 16),

(.C5, 16),

(.rest, 16),

(.C5, 16),

(.D5, 16),

(.E5, 16),

(.rest, 2),

// Bar16 (14)

(.C5, 16),

(.C5, 16),

(.rest, 16),

(.C5, 16),

(.rest, 16),

(.C5, 16),

(.D5, 16),

(.rest, 16),

(.E5, 16),

(.C5, 16),

(.rest, 16),

(.A4, 16),

(.G4, 16),

(.rest, 16),

(.rest, 8),

// Bar17 (1)

(.E5, 16),

(.E5, 16),

(.rest, 16),

(.E5, 16),

(.rest, 16),

(.C5, 16),

(.E5, 16),

(.rest, 16),

(.G5, 16),

(.rest, 16),

(.rest, 8),

(.rest, 4),

// Bar18 (2)

(.C5, 16),

(.rest, 16),

(.rest, 16),

(.G4, 16),

(.rest, 8),

(.E4, 16),

(.rest, 16),

(.rest, 16),

(.A4, 16),

(.rest, 16),

(.B4, 16),

(.rest, 16),

(.AS4, 16),

(.A4, 16),

(.rest, 16),

// Bar19 (3)

(.G4, 12),

(.E5, 12),

(.G5, 12),

(.A5, 16),

(.rest, 16),

(.F5, 16),

(.G5, 16),

(.rest, 16),

(.E5, 16),

(.rest, 16),

(.C5, 16),

(.D5, 16),

(.B4, 16),

(.rest, 8),

// Bar20 (4)

(.C5, 16),

(.rest, 16),

(.rest, 16),

(.G4, 16),

(.rest, 8),

(.E4, 16),

(.rest, 16),

(.rest, 16),

(.A4, 16),

(.rest, 16),

(.B4, 16),

(.rest, 16),

(.AS4, 16),

(.A4, 16),

(.rest, 16),

// Bar21 (3)

(.G4, 12),

(.E5, 12),

(.G5, 12),

(.A5, 16),

(.rest, 16),

(.F5, 16),

(.G5, 16),

(.rest, 16),

(.E5, 16),

(.rest, 16),

(.C5, 16),

(.D5, 16),

(.B4, 16),

(.rest, 8),

// Bar22

(.E5, 16),

(.C5, 16),

(.rest, 16),

(.G4, 16),

(.rest, 8),

(.GS4, 16),

(.rest, 16),

(.A4, 16),

(.F5, 16),

(.rest, 16),

(.F5, 16),

(.A4, 16),

(.rest, 16),

(.rest, 8),

// Bar23

(.B4, 12),

(.A5, 12),

(.A5, 12),

(.A5, 12),

(.G5, 12),

(.F5, 12),

(.E5, 16),

(.C5, 16),

(.rest, 16),

(.A4, 16),

(.G4, 16),

(.rest, 16),

(.rest, 8),

// Bar24 (22)

(.E5, 16),

(.C5, 16),

(.rest, 16),

(.G4, 16),

(.rest, 8),

(.GS4, 16),

(.rest, 16),

(.A4, 16),

(.F5, 16),

(.rest, 16),

(.F5, 16),

(.A4, 16),

(.rest, 16),

(.rest, 8),

// Bar25

(.B4, 16),

(.F5, 16),

(.rest, 16),

(.F5, 16),

(.F5, 12),

(.E5, 12),

(.D5, 12),

(.C5, 16),

(.rest, 16),

(.rest, 8),

(.rest, 4),

// Bar26 (22)

(.E5, 16),

(.C5, 16),

(.rest, 16),

(.G4, 16),

(.rest, 8),

(.GS4, 16),

(.rest, 16),

(.A4, 16),

(.F5, 16),

(.rest, 16),

(.F5, 16),

(.A4, 16),

(.rest, 16),

(.rest, 8),

// Bar27 (23)

(.B4, 12),

(.A5, 12),

(.A5, 12),

(.A5, 12),

(.G5, 12),

(.F5, 12),

(.E5, 16),

(.C5, 16),

(.rest, 16),

(.A4, 16),

(.G4, 16),

(.rest, 16),

(.rest, 8),

// Bar28 (22)

(.E5, 16),

(.C5, 16),

(.rest, 16),

(.G4, 16),

(.rest, 8),

(.GS4, 16),

(.rest, 16),

(.A4, 16),

(.F5, 16),

(.rest, 16),

(.F5, 16),

(.A4, 16),

(.rest, 16),

(.rest, 8),

// Bar29 (25)

(.B4, 16),

(.F5, 16),

(.rest, 16),

(.F5, 16),

(.F5, 12),

(.E5, 12),

(.D5, 12),

(.C5, 16),

(.rest, 16),

(.rest, 8),

(.rest, 4),

// Bar30 (14)

(.C5, 16),

(.C5, 16),

(.rest, 16),

(.C5, 16),

(.rest, 16),

(.C5, 16),

(.D5, 16),

(.rest, 16),

(.E5, 16),

(.C5, 16),

(.rest, 16),

(.A4, 16),

(.G4, 16),

(.rest, 16),

(.rest, 8),

// Bar31 (15)

(.C5, 16),

(.C5, 16),

(.rest, 16),

(.C5, 16),

(.rest, 16),

(.C5, 16),

(.D5, 16),

(.E5, 16),

(.rest, 2),

// Bar32 (14)

(.C5, 16),

(.C5, 16),

(.rest, 16),

(.C5, 16),

(.rest, 16),

(.C5, 16),

(.D5, 16),

(.rest, 16),

(.E5, 16),

(.C5, 16),

(.rest, 16),

(.A4, 16),

(.G4, 16),

(.rest, 16),

(.rest, 8),

// Bar33 (1)

(.E5, 16),

(.E5, 16),

(.rest, 16),

(.E5, 16),

(.rest, 16),

(.C5, 16),

(.E5, 16),

(.rest, 16),

(.G5, 16),

(.rest, 16),

(.rest, 8),

(.rest, 4),

// Bar34 (22)

(.E5, 16),

(.C5, 16),

(.rest, 16),

(.G4, 16),

(.rest, 8),

(.GS4, 16),

(.rest, 16),

(.A4, 16),

(.F5, 16),

(.rest, 16),

(.F5, 16),

(.A4, 16),

(.rest, 16),

(.rest, 8),

// Bar35 (23)

(.B4, 12),

(.A5, 12),

(.A5, 12),

(.A5, 12),

(.G5, 12),

(.F5, 12),

(.E5, 16),

(.C5, 16),

(.rest, 16),

(.A4, 16),

(.G4, 16),

(.rest, 16),

(.rest, 8),

// Bar36 (22)

(.E5, 16),

(.C5, 16),

(.rest, 16),

(.G4, 16),

(.rest, 8),

(.GS4, 16),

(.rest, 16),

(.A4, 16),

(.F5, 16),

(.rest, 16),

(.F5, 16),

(.A4, 16),

(.rest, 16),

(.rest, 8),

// Bar37 (25)

(.B4, 16),

(.F5, 16),

(.rest, 16),

(.F5, 16),

(.F5, 12),

(.E5, 12),

(.D5, 12),

(.C5, 16),

(.rest, 16),

(.rest, 8),

(.rest, 4),

]

static let track1: [Player.NoteInfo] = [

// Bar1

(.FS4, 16),

(.FS4, 16),

(.rest, 16),

(.FS4, 16),

(.rest, 16),

(.FS4, 16),

(.FS4, 16),

(.rest, 16),

(.B4, 16),

(.rest, 16),

(.rest, 8),

(.G4, 16),

(.rest, 16),

(.rest, 8),

// Bar2

(.E4, 16),

(.rest, 16),

(.rest, 16),

(.C4, 16),

(.rest, 8),

(.G3, 16),

(.rest, 16),

(.rest, 16),

(.C4, 16),

(.rest, 16),

(.D4, 16),

(.rest, 16),

(.CS4, 16),

(.C4, 16),

(.rest, 16),

// Bar3

(.C4, 12),

(.G4, 12),

(.B4, 12),

(.C5, 16),

(.rest, 16),

(.A4, 16),

(.B4, 16),

(.rest, 16),

(.A4, 16),

(.rest, 16),

(.E4, 16),

(.F4, 16),

(.D4, 16),

(.rest, 8),

// Bar4 (2)

(.E4, 16),

(.rest, 16),

(.rest, 16),

(.C4, 16),

(.rest, 8),

(.G3, 16),

(.rest, 16),

(.rest, 16),

(.C4, 16),

(.rest, 16),

(.D4, 16),

(.rest, 16),

(.CS4, 16),

(.C4, 16),

(.rest, 16),

// Bar5 (3)

(.C4, 12),

(.G4, 12),

(.B4, 12),

(.C5, 16),

(.rest, 16),

(.A4, 16),

(.B4, 16),

(.rest, 16),

(.A4, 16),

(.rest, 16),

(.E4, 16),

(.F4, 16),

(.D4, 16),

(.rest, 8),

// Bar6

(.rest, 8),

(.E5, 16),

(.DS5, 16),

(.D5, 16),

(.B4, 16),

(.rest, 16),

(.C5, 16),

(.rest, 16),

(.E4, 16),

(.F4, 16),

(.G4, 16),

(.rest, 16),

(.C4, 16),

(.E4, 16),

(.F4, 16),

// Bar7

(.rest, 8),

(.E5, 16),

(.DS5, 16),

(.D5, 16),

(.B4, 16),

(.rest, 16),

(.C5, 16),

(.rest, 16),

(.F5, 16),

(.rest, 16),

(.F5, 16),

(.F5, 16),

(.rest, 16),

(.rest, 8),

// Bar8 (6)

(.rest, 8),

(.E5, 16),

(.DS5, 16),

(.D5, 16),

(.B4, 16),

(.rest, 16),

(.C5, 16),

(.rest, 16),

(.E4, 16),

(.F4, 16),

(.G4, 16),

(.rest, 16),

(.C4, 16),

(.E4, 16),

(.F4, 16),

// Bar9

(.rest, 8),

(.GS4, 16),

(.rest, 16),

(.rest, 16),

(.F4, 16),

(.rest, 8),

(.E4, 16),

(.rest, 16),

(.rest, 8),

(.rest, 4),

// Bar10 (6)

(.rest, 8),

(.E5, 16),

(.DS5, 16),

(.D5, 16),

(.B4, 16),

(.rest, 16),

(.C5, 16),

(.rest, 16),

(.E4, 16),

(.F4, 16),

(.G4, 16),

(.rest, 16),

(.C4, 16),

(.E4, 16),

(.F4, 16),

// Bar11 (7)

(.rest, 8),

(.E5, 16),

(.DS5, 16),

(.D5, 16),

(.B4, 16),

(.rest, 16),

(.C5, 16),

(.rest, 16),

(.F5, 16),

(.rest, 16),

(.F5, 16),

(.F5, 16),

(.rest, 16),

(.rest, 8),

// Bar12 (6)

(.rest, 8),

(.E5, 16),

(.DS5, 16),

(.D5, 16),

(.B4, 16),

(.rest, 16),

(.C5, 16),

(.rest, 16),

(.E4, 16),

(.F4, 16),

(.G4, 16),

(.rest, 16),

(.C4, 16),

(.E4, 16),

(.F4, 16),

// Bar13 (9)

(.rest, 8),

(.GS4, 16),

(.rest, 16),

(.rest, 16),

(.F4, 16),

(.rest, 8),

(.E4, 16),

(.rest, 16),

(.rest, 8),

(.rest, 4),

// Bar14

(.GS4, 16),

(.GS4, 16),

(.rest, 16),

(.GS4, 16),

(.rest, 16),

(.GS4, 16),

(.AS4, 16),

(.rest, 16),

(.G4, 16),

(.E4, 16),

(.rest, 16),

(.E4, 16),

(.C4, 16),

(.rest, 16),

(.rest, 8),

// Bar15

(.GS4, 16),

(.GS4, 16),

(.rest, 16),

(.GS4, 16),

(.rest, 16),

(.GS4, 16),

(.AS4, 16),

(.G4, 16),

(.rest, 2),

// Bar16 (14)

(.A4, 16),

(.A4, 16),

(.rest, 16),

(.A4, 16),

(.rest, 16),

(.A4, 16),

(.AS4, 16),

(.rest, 16),

(.G4, 16),

(.E4, 16),

(.rest, 16),

(.E4, 16),

(.C4, 16),

(.rest, 16),

(.rest, 8),

// Bar17 (1)

(.FS4, 16),

(.FS4, 16),

(.rest, 16),

(.FS4, 16),

(.rest, 16),

(.FS4, 16),

(.FS4, 16),

(.rest, 16),

(.B4, 16),

(.rest, 16),

(.rest, 8),

(.G4, 16),

(.rest, 16),

(.rest, 8),

// Bar18 (2)

(.E4, 16),

(.rest, 16),

(.rest, 16),

(.C4, 16),

(.rest, 8),

(.G3, 16),

(.rest, 16),

(.rest, 16),

(.C4, 16),

(.rest, 16),

(.D4, 16),

(.rest, 16),

(.CS4, 16),

(.C4, 16),

(.rest, 16),

// Bar19 (3)

(.C4, 12),

(.G4, 12),

(.B4, 12),

(.C5, 16),

(.rest, 16),

(.A4, 16),

(.B4, 16),

(.rest, 16),

(.A4, 16),

(.rest, 16),

(.E4, 16),

(.F4, 16),

(.D4, 16),

(.rest, 8),

// Bar20 (2)

(.E4, 16),

(.rest, 16),

(.rest, 16),

(.C4, 16),

(.rest, 8),

(.G3, 16),

(.rest, 16),

(.rest, 16),

(.C4, 16),

(.rest, 16),

(.D4, 16),

(.rest, 16),

(.CS4, 16),

(.C4, 16),

(.rest, 16),

// Bar21 (3)

(.C4, 12),

(.G4, 12),

(.B4, 12),

(.C5, 16),

(.rest, 16),

(.A4, 16),

(.B4, 16),

(.rest, 16),

(.A4, 16),

(.rest, 16),

(.E4, 16),

(.F4, 16),

(.D4, 16),

(.rest, 8),

// Bar22

(.C5, 16),

(.A4, 16),

(.rest, 16),

(.E4, 16),

(.rest, 8),

(.E4, 16),

(.rest, 16),

(.F4, 16),

(.C5, 16),

(.rest, 16),

(.C5, 16),

(.F4, 16),

(.rest, 16),

(.rest, 8),

// Bar23

(.G4, 12),

(.F5, 12),

(.F5, 12),

(.F5, 12),

(.E5, 12),

(.D5, 12),

(.C5, 16),

(.A4, 16),

(.rest, 16),

(.F4, 16),

(.E4, 16),

(.rest, 16),

(.rest, 8),

// Bar24 (22)

(.C5, 16),

(.A4, 16),

(.rest, 16),

(.E4, 16),

(.rest, 8),

(.E4, 16),

(.rest, 16),

(.F4, 16),

(.C5, 16),

(.rest, 16),

(.C5, 16),

(.F4, 16),

(.rest, 16),

(.rest, 8),

// Bar25

(.G4, 16),

(.D5, 16),

(.rest, 16),

(.D5, 16),

(.D5, 12),

(.C5, 12),

(.B4, 12),

(.G4, 16),

(.E4, 16),

(.rest, 16),

(.E4, 16),

(.C4, 16),

(.rest, 16),

(.rest, 8),

// Bar26 (22)

(.C5, 16),

(.A4, 16),

(.rest, 16),

(.E4, 16),

(.rest, 8),

(.E4, 16),

(.rest, 16),

(.F4, 16),

(.C5, 16),

(.rest, 16),

(.C5, 16),

(.F4, 16),

(.rest, 16),

(.rest, 8),

// Bar27 (23)

(.G4, 12),

(.F5, 12),

(.F5, 12),

(.F5, 12),

(.E5, 12),

(.D5, 12),

(.C5, 16),

(.A4, 16),

(.rest, 16),

(.F4, 16),

(.E4, 16),

(.rest, 16),

(.rest, 8),

// Bar28 (22)

(.C5, 16),

(.A4, 16),

(.rest, 16),

(.E4, 16),

(.rest, 8),

(.E4, 16),

(.rest, 16),

(.F4, 16),

(.C5, 16),

(.rest, 16),

(.C5, 16),

(.F4, 16),

(.rest, 16),

(.rest, 8),

// Bar29 (25)

(.G4, 16),

(.D5, 16),

(.rest, 16),

(.D5, 16),

(.D5, 12),

(.C5, 12),

(.B4, 12),

(.G4, 16),

(.E4, 16),

(.rest, 16),

(.E4, 16),

(.C4, 16),

(.rest, 16),

(.rest, 8),

// Bar30 (14)

(.GS4, 16),

(.GS4, 16),

(.rest, 16),

(.GS4, 16),

(.rest, 16),

(.GS4, 16),

(.AS4, 16),

(.rest, 16),

(.G4, 16),

(.E4, 16),

(.rest, 16),

(.E4, 16),

(.C4, 16),

(.rest, 16),

(.rest, 8),

// Bar31 (15)

(.GS4, 16),

(.GS4, 16),

(.rest, 16),

(.GS4, 16),

(.rest, 16),

(.GS4, 16),

(.AS4, 16),

(.G4, 16),

(.rest, 2),

// Bar32 (14)

(.GS4, 16),

(.GS4, 16),

(.rest, 16),

(.GS4, 16),

(.rest, 16),

(.GS4, 16),

(.AS4, 16),

(.rest, 16),

(.G4, 16),

(.E4, 16),

(.rest, 16),

(.E4, 16),

(.C4, 16),

(.rest, 16),

(.rest, 8),

// Bar33 (1)

(.FS4, 16),

(.FS4, 16),

(.rest, 16),

(.FS4, 16),

(.rest, 16),

(.FS4, 16),

(.FS4, 16),

(.rest, 16),

(.B4, 16),

(.rest, 16),

(.rest, 8),

(.G4, 16),

(.rest, 16),

(.rest, 8),

// Bar34 (22)

(.C5, 16),

(.A4, 16),

(.rest, 16),

(.E4, 16),

(.rest, 8),

(.E4, 16),

(.rest, 16),

(.F4, 16),

(.C5, 16),

(.rest, 16),

(.C5, 16),

(.F4, 16),

(.rest, 16),

(.rest, 8),

// Bar35 (23)

(.G4, 12),

(.F5, 12),

(.F5, 12),

(.F5, 12),

(.E5, 12),

(.D5, 12),

(.C5, 16),

(.A4, 16),

(.rest, 16),

(.F4, 16),

(.E4, 16),

(.rest, 16),

(.rest, 8),

// Bar36 (22)

(.C5, 16),

(.A4, 16),

(.rest, 16),

(.E4, 16),

(.rest, 8),

(.E4, 16),

(.rest, 16),

(.F4, 16),

(.C5, 16),

(.rest, 16),

(.C5, 16),

(.F4, 16),

(.rest, 16),

(.rest, 8),

// Bar37 (25)

(.G4, 16),

(.D5, 16),

(.rest, 16),

(.D5, 16),

(.D5, 12),

(.C5, 12),

(.B4, 12),

(.G4, 16),

(.E4, 16),

(.rest, 16),

(.E4, 16),

(.C4, 16),

(.rest, 16),

(.rest, 8),

]

static let track2: [Player.NoteInfo] = [

// Bar1

(.D3, 16),

(.D3, 16),

(.rest, 16),

(.D3, 16),

(.rest, 16),

(.D3, 16),

(.D3, 16),

(.rest, 16),

(.G4, 16),

(.rest, 16),

(.rest, 8),

(.G3, 16),

(.rest, 16),

(.rest, 8),

// Bar2

(.G3, 16),

(.rest, 16),

(.rest, 16),

(.E3, 16),

(.rest, 8),

(.C3, 16),

(.rest, 16),

(.rest, 16),

(.F3, 16),

(.rest, 16),

(.G3, 16),

(.rest, 16),

(.FS3, 16),

(.F3, 16),

(.rest, 16),

// Bar3

(.E3, 12),

(.C4, 12),

(.E4, 12),

(.F4, 16),

(.rest, 16),

(.D4, 16),

(.E4, 16),

(.rest, 16),

(.C4, 16),

(.rest, 16),

(.A3, 16),

(.B3, 16),

(.G3, 16),

(.rest, 8),

// Bar4

(.G3, 16),

(.rest, 16),

(.rest, 16),

(.E3, 16),

(.rest, 8),

(.C3, 16),

(.rest, 16),

(.rest, 16),

(.F3, 16),

(.rest, 16),

(.G3, 16),

(.rest, 16),

(.FS3, 16),

(.F3, 16),

(.rest, 16),

// Bar5

(.E3, 12),

(.C4, 12),

(.E4, 12),

(.F4, 16),

(.rest, 16),

(.D4, 16),

(.E4, 16),

(.rest, 16),

(.C4, 16),

(.rest, 16),

(.A3, 16),

(.B3, 16),

(.G3, 16),

(.rest, 8),

// Bar6

(.C3, 16),

(.rest, 16),

(.rest, 16),

(.E3, 16),

(.rest, 8),

(.C3, 16),

(.rest, 16),

(.rest, 16),

(.F3, 16),

(.rest, 16),

(.G3, 16),

(.rest, 16),

(.FS3, 16),

(.F3, 16),

(.rest, 16),

// Bar7

(.C3, 16),

(.rest, 16),

(.rest, 16),

(.E3, 16),

(.rest, 8),

(.G3, 16),

(.C4, 16),

(.rest, 16),

(.G5, 16),

(.rest, 16),

(.G5, 16),

(.G5, 16),

(.rest, 16),

(.G3, 16),

(.rest, 16),

// Bar8

(.C3, 16),

(.rest, 16),

(.rest, 16),

(.E3, 16),

(.rest, 8),

(.C3, 16),

(.rest, 16),

(.rest, 16),

(.F3, 16),

(.rest, 16),

(.G3, 16),

(.rest, 16),

(.FS3, 16),

(.F3, 16),

(.rest, 16),

// Bar9

(.C3, 16),

(.rest, 16),

(.GS3, 16),

(.rest, 16),

(.rest, 16),

(.AS3, 16),

(.rest, 8),

(.C4, 16),

(.rest, 16),

(.rest, 16),

(.G3, 16),

(.G3, 16),

(.rest, 16),

(.C3, 16),

(.rest, 16),

// Bar10

(.C3, 16),

(.rest, 16),

(.rest, 16),

(.E3, 16),

(.rest, 8),

(.C3, 16),

(.rest, 16),

(.rest, 16),

(.F3, 16),

(.rest, 16),

(.G3, 16),

(.rest, 16),

(.FS3, 16),

(.F3, 16),

(.rest, 16),

// Bar11

(.C3, 16),

(.rest, 16),

(.rest, 16),

(.E3, 16),

(.rest, 8),

(.G3, 16),

(.C4, 16),

(.rest, 16),

(.G5, 16),

(.rest, 16),

(.G5, 16),

(.G5, 16),

(.rest, 16),

(.G3, 16),

(.rest, 16),

// Bar12

(.C3, 16),

(.rest, 16),

(.rest, 16),

(.E3, 16),

(.rest, 8),

(.C3, 16),

(.rest, 16),

(.rest, 16),

(.F3, 16),

(.rest, 16),

(.G3, 16),

(.rest, 16),

(.FS3, 16),

(.F3, 16),

(.rest, 16),

// Bar13

(.C3, 16),

(.rest, 16),

(.GS3, 16),

(.rest, 16),

(.rest, 16),

(.AS3, 16),

(.rest, 8),

(.C4, 16),

(.rest, 16),

(.rest, 16),

(.G3, 16),

(.G3, 16),

(.rest, 16),

(.C3, 16),

(.rest, 16),

// Bar14

(.FS2, 16),

(.rest, 16),

(.rest, 16),

(.DS3, 16),

(.rest, 8),

(.GS3, 16),

(.rest, 16),

(.G3, 16),

(.rest, 16),

(.rest, 16),

(.C3, 16),

(.rest, 8),

(.F2, 16),

(.rest, 16),

// Bar15

(.FS2, 16),

(.rest, 16),

(.rest, 16),

(.DS3, 16),

(.rest, 8),

(.GS3, 16),

(.rest, 16),

(.G3, 16),

(.rest, 16),

(.rest, 16),

(.C3, 16),

(.rest, 8),

(.F2, 16),

(.rest, 16),

// Bar16

(.FS2, 16),

(.rest, 16),

(.rest, 16),

(.DS3, 16),

(.rest, 8),

(.GS3, 16),

(.rest, 16),

(.G3, 16),

(.rest, 16),

(.rest, 16),

(.C3, 16),

(.rest, 8),

(.F2, 16),

(.rest, 16),

// Bar17

(.D3, 16),

(.D3, 16),

(.rest, 16),

(.D3, 16),

(.rest, 16),

(.D3, 16),

(.D3, 16),

(.rest, 16),

(.G4, 16),

(.rest, 16),

(.rest, 8),

(.G3, 16),

(.rest, 16),

(.rest, 8),

// Bar18

(.G3, 16),

(.rest, 16),

(.rest, 16),

(.E3, 16),

(.rest, 8),

(.C3, 16),

(.rest, 16),

(.rest, 16),

(.F3, 16),

(.rest, 16),

(.G3, 16),

(.rest, 16),

(.FS3, 16),

(.F3, 16),

(.rest, 16),

// Bar19

(.E3, 12),

(.C4, 12),

(.E4, 12),

(.F4, 16),

(.rest, 16),

(.D4, 16),

(.E4, 16),

(.rest, 16),

(.C4, 16),

(.rest, 16),

(.A3, 16),

(.B3, 16),

(.G3, 16),

(.rest, 8),

// Bar20

(.G3, 16),

(.rest, 16),

(.rest, 16),

(.E3, 16),

(.rest, 8),

(.C3, 16),

(.rest, 16),

(.rest, 16),

(.F3, 16),

(.rest, 16),

(.G3, 16),

(.rest, 16),

(.FS3, 16),

(.F3, 16),

(.rest, 16),

// Bar21

(.E3, 12),

(.C4, 12),

(.E4, 12),

(.F4, 16),

(.rest, 16),

(.D4, 16),

(.E4, 16),

(.rest, 16),

(.C4, 16),

(.rest, 16),

(.A3, 16),

(.B3, 16),

(.G3, 16),

(.rest, 8),

// Bar22

(.C3, 16),

(.rest, 16),

(.rest, 16),

(.FS3, 16),

(.G3, 16),

(.rest, 16),

(.C4, 16),

(.rest, 16),

(.F3, 16),

(.rest, 16),

(.F3, 16),

(.rest, 16),

(.C4, 16),

(.C4, 16),

(.F3, 16),

(.rest, 16),

// Bar23

(.D3, 16),

(.rest, 16),

(.rest, 16),

(.F3, 16),

(.G3, 16),

(.rest, 16),

(.B3, 16),

(.rest, 16),

(.G3, 16),

(.rest, 16),

(.G3, 16),

(.rest, 16),

(.C4, 16),

(.C4, 16),

(.G3, 16),

(.rest, 16),

// Bar24

(.C3, 16),

(.rest, 16),

(.rest, 16),

(.FS3, 16),

(.G3, 16),

(.rest, 16),

(.C4, 16),

(.rest, 16),

(.F3, 16),

(.rest, 16),

(.F3, 16),

(.rest, 16),

(.C4, 16),

(.C4, 16),

(.F3, 16),

(.rest, 16),

// Bar25

(.G3, 16),

(.G3, 16),

(.rest, 16),

(.G3, 16),

(.G3, 12),

(.A3, 12),

(.B3, 12),

(.C4, 16),

(.rest, 16),

(.G3, 16),

(.rest, 16),

(.C3, 16),

(.rest, 16),

(.rest, 8),

// Bar26

(.C3, 16),

(.rest, 16),

(.rest, 16),

(.FS3, 16),

(.G3, 16),

(.rest, 16),

(.C4, 16),

(.rest, 16),

(.F3, 16),

(.rest, 16),

(.F3, 16),

(.rest, 16),

(.C4, 16),

(.C4, 16),

(.F3, 16),

(.rest, 16),

// Bar27

(.D3, 16),

(.rest, 16),

(.rest, 16),

(.F3, 16),

(.G3, 16),

(.rest, 16),

(.B3, 16),

(.rest, 16),

(.G3, 16),

(.rest, 16),

(.G3, 16),

(.rest, 16),

(.C4, 16),

(.C4, 16),

(.G3, 16),

(.rest, 16),

// Bar28

(.C3, 16),

(.rest, 16),

(.rest, 16),

(.FS3, 16),

(.G3, 16),

(.rest, 16),

(.C4, 16),

(.rest, 16),

(.F3, 16),

(.rest, 16),

(.F3, 16),

(.rest, 16),

(.C4, 16),

(.C4, 16),

(.F3, 16),

(.rest, 16),

// Bar29

(.G3, 16),

(.G3, 16),

(.rest, 16),

(.G3, 16),

(.G3, 12),

(.A3, 12),

(.B3, 12),

(.C4, 16),

(.rest, 16),

(.G3, 16),

(.rest, 16),

(.C3, 16),

(.rest, 16),

(.rest, 8),

// Bar30

(.FS2, 16),

(.rest, 16),

(.rest, 16),

(.DS3, 16),

(.rest, 8),

(.GS3, 16),

(.rest, 16),

(.G3, 16),

(.rest, 16),

(.rest, 16),

(.C3, 16),

(.rest, 8),

(.F2, 16),

(.rest, 16),

// Bar31

(.FS2, 16),

(.rest, 16),

(.rest, 16),

(.DS3, 16),

(.rest, 8),

(.GS3, 16),

(.rest, 16),

(.G3, 16),

(.rest, 16),

(.rest, 16),

(.C3, 16),

(.rest, 8),

(.F2, 16),

(.rest, 16),

// Bar32

(.FS2, 16),

(.rest, 16),

(.rest, 16),

(.DS3, 16),

(.rest, 8),

(.GS3, 16),

(.rest, 16),

(.G3, 16),

(.rest, 16),

(.rest, 16),

(.C3, 16),

(.rest, 8),

(.F2, 16),

(.rest, 16),

// Bar33

(.D3, 16),

(.D3, 16),

(.rest, 16),

(.D3, 16),

(.rest, 16),

(.D3, 16),

(.D3, 16),

(.rest, 16),

(.G4, 16),

(.rest, 16),

(.rest, 8),

(.G3, 16),

(.rest, 16),

(.rest, 8),

// Bar34

(.C3, 16),

(.rest, 16),

(.rest, 16),

(.FS3, 16),

(.G3, 16),

(.rest, 16),

(.C4, 16),

(.rest, 16),

(.F3, 16),

(.rest, 16),

(.F3, 16),

(.rest, 16),

(.C4, 16),

(.C4, 16),

(.F3, 16),

(.rest, 16),

// Bar35

(.D3, 16),

(.rest, 16),

(.rest, 16),

(.F3, 16),

(.G3, 16),

(.rest, 16),

(.B3, 16),

(.rest, 16),

(.G3, 16),

(.rest, 16),

(.G3, 16),

(.rest, 16),

(.C4, 16),

(.C4, 16),

(.G3, 16),

(.rest, 16),

// Bar36

(.C3, 16),

(.rest, 16),

(.rest, 16),

(.FS3, 16),

(.G3, 16),

(.rest, 16),

(.C4, 16),

(.rest, 16),

(.F3, 16),

(.rest, 16),

(.F3, 16),

(.rest, 16),

(.C4, 16),

(.C4, 16),

(.F3, 16),

(.rest, 16),

// Bar37

(.G3, 16),

(.G3, 16),

(.rest, 16),

(.G3, 16),

(.G3, 12),

(.A3, 12),

(.B3, 12),

(.C4, 16),

(.rest, 16),

(.G3, 16),

(.rest, 16),

(.C3, 16),

(.rest, 16),

(.rest, 8),

]

static let tracks = [track0, track1, track2]

static let trackWaveforms: [Waveform] = [.square, .square, .triangle]

// The sound generated by square wave is much louder than that of triangle wave.

// Besides, the $0.3 speaker in your kit doesn't have a good low-frequency response.

// You can adjust the amplitude ratio to make it sound better.

static let amplitudeRatios: [Float] = [0.1, 0.1, 1]

static let bpm = 105

static let timeSignature = (4, 4)

}

🔸API

I2S

This class sends audio data to external devices using the I2S protocol.

init(_ idName: IdName, rate: Int = 16_000, bits: Int = 16, mode: Mode = .philips, timeout: Int = Int(SWIFT_FOREVER))

Initialize an I2S output interface for audio devices.

Parameters:

- idName: the specified I2S pin.

- rate: the sample rate of the audio. The default rate is 16000 Hz.

- bits: the sample depth of the audio, 16-bit by default.

- mode: defines when the data is sent, that is, how the data bits are aligned with the frame sync signal. The .philips mode is the default.

- timeout: the wait time for data transmission. By default, it waits until the transmission is finished.

func write(_ data: [UInt8], count: Int? = nil) -> Result<Int, Errno>

Send audio data out to devices.

Parameters:

- data: the audio data stored in a UInt8 array.

- count: the number of bytes to send. If nil, all elements in data are sent.

Return value:

- The result of the data transmission indicating whether it succeeds or not.
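The write method takes a [UInt8] array, but audio samples are usually generated as Int16 values, like the rawSamples array in the first project. Each sample therefore has to be split into two bytes. The sketch below assumes the bytes should be sent low byte first (little-endian), which is the byte order an Int16 buffer already has in memory on a little-endian ARM MCU. The helper name bytes(from:) is hypothetical and not part of SwiftIO.

```swift
// Hypothetical helper: convert 16-bit PCM samples into the byte array
// passed to I2S.write(_:count:), low byte first (little-endian is an assumption).
func bytes(from samples: [Int16]) -> [UInt8] {
    var out: [UInt8] = []
    out.reserveCapacity(samples.count * 2)
    for sample in samples {
        // Reinterpret the signed sample as raw 16 bits.
        let raw = UInt16(bitPattern: sample)
        out.append(UInt8(truncatingIfNeeded: raw))       // low byte
        out.append(UInt8(truncatingIfNeeded: raw >> 8))  // high byte
    }
    return out
}

// On the board, the result would then be handed to the speaker, e.g.:
// let result = speaker.write(bytes(from: rawSamples))
print(bytes(from: [1, -1, 0x1234]))  // [1, 0, 255, 255, 52, 18]
```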

🔸Background

What is I2S?

I2S (inter-integrated circuit sound) is a serial communication protocol that is designed specifically for digital audio data. It provides a simple and efficient method of transmitting high-quality audio data between devices.

In the context of the I2S protocol, a word is a group of bits transmitted together as a single unit, usually one audio sample for one channel. A left-channel word followed by a right-channel word forms one frame. The word length depends on the bit depth used and typically ranges from 16 to 32 bits.

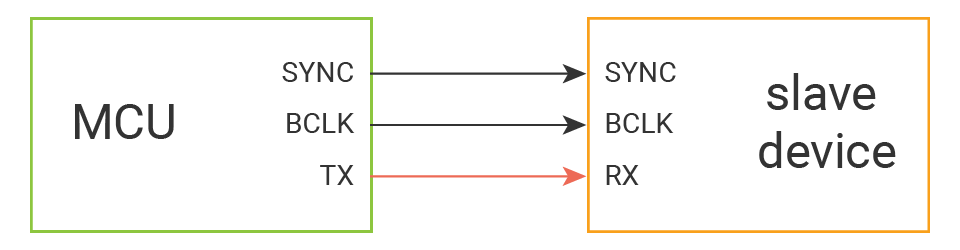

I2S uses three signals for data transmission:

- SCK (Serial Clock), also called Bit Clock (BCLK): it carries the clock signal generated by the master device and synchronizes the data transmission between the master and slave devices. Its frequency equals sample rate x bits per channel x number of channels.

- FS (Frame Sync), also called Word Select (WS): it indicates which audio channel is being transmitted. The left and right channel samples are sent one after the other on the same data line, so the receiver needs this signal to tell which channel the current word belongs to.

- SD (Serial Data): it carries the audio data itself.
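Plugging numbers into the BCLK formula shows how fast the bit clock runs. This sketch assumes every frame has two channel slots, as in standard I2S, and that each slot is exactly as wide as the sample depth. Some drivers pad samples into wider slots, which would raise the clock. The function name is illustrative only.

```swift
// Bit clock (BCLK) frequency of an I2S link:
// sample rate x bits per channel x number of channels.
func bitClockFrequency(sampleRate: Int, bitsPerChannel: Int, channels: Int = 2) -> Int {
    return sampleRate * bitsPerChannel * channels
}

// The tutorial's default I2S setting: 16 kHz, 16-bit samples, two slots per frame.
print(bitClockFrequency(sampleRate: 16_000, bitsPerChannel: 16))  // 512000
// CD-quality stereo audio: 44.1 kHz, 16-bit.
print(bitClockFrequency(sampleRate: 44_100, bitsPerChannel: 16))  // 1411200
```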

To understand the data format used in the I2S protocol, you should have a good understanding of audio sampling concepts, which will be discussed below.

Peripheral - I2S

An I2S peripheral on an MCU is a hardware module that implements the I2S protocol for interfacing with digital audio devices.

The I2S peripheral on an MCU usually has several registers that can be configured to control various aspects of the I2S operation, such as the clock frequency, word length, data alignment, and channel configuration. To use it, you typically need to initialize the I2S peripheral and configure it for the desired audio format and operation. Once it is configured, you can use it to send or receive digital audio data to or from external audio devices.

The SwiftIO Micro always serves as a master device. It provides two I2S interfaces:

- one is used to send digital audio data from the MCU to other audio devices, such as a digital-to-analog converter (DAC) or an audio amplifier. In this case, the serial data line is TX, used to send data.

- the other is used to receive digital audio data from an external audio device, such as a microphone. The corresponding serial data line (RX) only receives audio data.

🔸New concept

You listen to music every day, but do you know how audio is stored on your computer? There are also many different file formats, so how do they differ? Let's find out. We strongly recommend reading this detailed article about sound.

Waveform

The waveform of a sound refers to the shape of the sound wave as it oscillates over time. Different types of waveforms produce different tonal qualities, and they can be combined in various ways to create complex and interesting sounds.

Sine, square, triangle, and sawtooth waves are four common waveforms used in signal processing and music synthesis. You can visit this article to hear the sounds generated by these waveforms.

The sine wave is the simplest and most fundamental waveform. It contains a single frequency without any harmonics. The other periodic waveforms can be expressed as a sum of sine waves of different frequencies and amplitudes, which is known as a Fourier series.
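As an illustration of the Fourier idea, a square wave can be approximated by adding odd harmonics of a sine wave. Each harmonic's amplitude falls off as 1/n: square(x) is approximately (4/π)(sin x + sin 3x / 3 + sin 5x / 5 + ...). The more terms you add, the flatter the top of the wave becomes. The function below is a hypothetical demonstration only and is not how the project code generates its square wave.

```swift
import Foundation

// Approximate a unit square wave at angle x (radians) by summing
// the first `terms` odd sine harmonics, each weighted by 1/n.
func squareFromHarmonics(_ x: Double, terms: Int) -> Double {
    var sum = 0.0
    for k in 0..<terms {
        let n = Double(2 * k + 1)  // odd harmonics: 1, 3, 5, ...
        sum += sin(n * x) / n
    }
    return 4 / Double.pi * sum
}

// At x = π/2 the square wave equals 1; more harmonics bring the sum closer to it.
for terms in [1, 5, 50] {
    print(terms, squareFromHarmonics(Double.pi / 2, terms: terms))
}
```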

Synthesizers use various waveforms and manipulate them through operations such as frequency modulation, amplitude modulation, and filtering to create complex and unique sounds.

The waveforms discussed here are periodic, which means that they repeat themselves over time. They can be repeated at regular intervals to create a consistent pitch or tone.
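Because these waveforms are periodic, each one can be described by a function of its phase, that is, its position within one cycle. Repeating that function over and over produces a steady tone. The sketch below uses a hypothetical WaveShape enum, which is not the Waveform type in the project code. It maps a phase in [0, 1) to a value in [-1, 1], and any phase outside that range wraps around.

```swift
import Foundation

// Illustrative waveform shapes: one full cycle spans phase 0..<1,
// and every shape returns a value in [-1, 1].
enum WaveShape {
    case sine, square, triangle, sawtooth

    func value(atPhase phase: Double) -> Double {
        let p = phase - phase.rounded(.down)  // wrap into [0, 1) so the shape repeats
        switch self {
        case .sine:
            return sin(2 * Double.pi * p)
        case .square:
            return p < 0.5 ? 1 : -1
        case .triangle:
            // Starts at 0, peaks at 1 at p = 0.25, bottoms out at -1 at p = 0.75.
            let q = (p + 0.25) - (p + 0.25).rounded(.down)
            return 1 - 4 * abs(q - 0.5)
        case .sawtooth:
            // Rises linearly from -1 toward 1, then drops back at the next cycle.
            return 2 * p - 1
        }
    }
}

// Print eight evenly spaced points of one cycle for each shape.
for shape in [WaveShape.sine, .square, .triangle, .sawtooth] {
    let cycle = (0..<8).map { shape.value(atPhase: Double($0) / 8) }
    print(shape, cycle)
}
```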

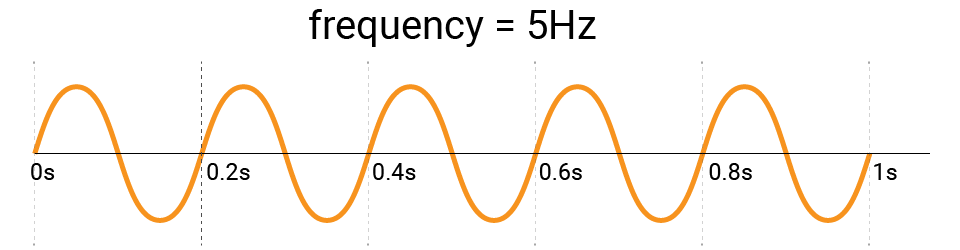

The frequency of a wave is a measure of how many cycles (repetitions) the wave completes in one second, and it is measured in hertz (Hz). For example, the wave below repeats its basic cycle 5 times in one second, so its frequency is 5 Hz. The higher the frequency, the higher the pitch. Human hearing covers frequencies from about 20 Hz to 20 kHz.
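Frequency also tells you how many digital samples one cycle takes: samples per cycle = sample rate / frequency. For example, a 440 Hz tone at 16 kHz uses about 36.4 samples per cycle. The second function sketches one common way to play a stored single-cycle waveform at any pitch, a wavetable that is stepped through by tableLength x frequency / sampleRate positions per output sample. The 1000-sample table and 16 kHz rate mirror the first project's rawSampleLength and sampleRate, but this stepping scheme is an assumption for illustration, not necessarily what the project code does.

```swift
// How many output samples one cycle of a tone occupies.
func samplesPerCycle(frequency: Double, sampleRate: Double) -> Double {
    return sampleRate / frequency
}

// Wavetable sketch: indices into a stored single-cycle waveform that
// reproduce it at `frequency` when output at `sampleRate`.
func wavetableIndices(tableLength: Int, frequency: Double, sampleRate: Double, count: Int) -> [Int] {
    let step = Double(tableLength) * frequency / sampleRate
    var position = 0.0
    var indices: [Int] = []
    indices.reserveCapacity(count)
    for _ in 0..<count {
        indices.append(Int(position))  // use the stored sample at or just below the position
        // Advance and wrap around, because the waveform repeats every cycle.
        position = (position + step).truncatingRemainder(dividingBy: Double(tableLength))
    }
    return indices
}

print(samplesPerCycle(frequency: 440, sampleRate: 16_000))  // about 36.36
print(wavetableIndices(tableLength: 1000, frequency: 440, sampleRate: 16_000, count: 4))  // [0, 27, 55, 82]
```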

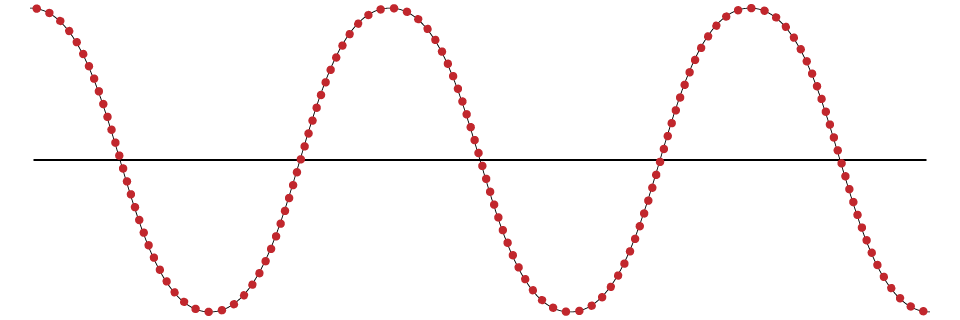

Audio sampling

A sound signal is analog: its value changes continuously over time. Audio sampling is the process of converting a continuous analog audio signal into a discrete digital format that can be stored and manipulated digitally.

There are several techniques for storing audio data digitally. Pulse Code Modulation (PCM) is a popular method for digitally encoding analog signals. In PCM, the amplitude of the analog signal is sampled at regular intervals, and each sample is then quantized into a digital value using a specified bit depth. With a high enough sample rate and bit depth, you can closely recreate the original audio signal.
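The two PCM steps, sampling at regular intervals and then quantizing each sample, fit in a few lines. This sketch uses a pure sine tone in place of a real analog signal and quantizes to signed 16-bit values scaled to Int16.max. The function name and the choice of a sine input are illustrative assumptions.

```swift
import Foundation

// Minimal PCM sketch: sample a sine tone every 1 / sampleRate seconds and
// quantize each sample to a signed 16-bit integer.
func pcmSine(frequency: Double, sampleRate: Int, count: Int, amplitude: Double = 1.0) -> [Int16] {
    precondition((0...1).contains(amplitude), "amplitude must be in 0...1 to fit in Int16")
    let maxValue = Double(Int16.max)
    return (0..<count).map { n in
        let t = Double(n) / Double(sampleRate)                   // time of the nth sample in seconds
        let analog = amplitude * sin(2 * Double.pi * frequency * t)
        return Int16((analog * maxValue).rounded())              // quantize to the nearest integer level
    }
}

// A 4 kHz tone sampled at 16 kHz gives exactly four samples per cycle.
print(pcmSine(frequency: 4_000, sampleRate: 16_000, count: 4))  // [0, 32767, 0, -32767]
```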

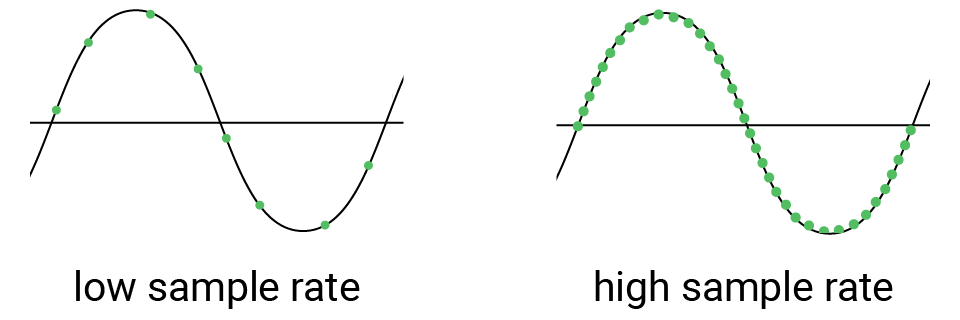

❖ Sample rate

Sample rate describes how many times the signal is sampled in one second, measured in hertz (Hz).

A well-known rule about the sample rate is the Nyquist-Shannon sampling theorem. It states that to accurately represent an analog signal in digital form, the sample rate must be more than twice the highest frequency in the original signal. Twice that highest frequency is called the Nyquist rate. If the sample rate is too low, the higher frequency components of the original signal are lost or folded back into lower frequencies (aliasing), which degrades the digital signal.
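To see what too low a sample rate does, consider a pure tone. After sampling, a tone above half the sample rate is indistinguishable from a lower tone whose frequency is its distance to the nearest multiple of the sample rate. The helper below is a hypothetical illustration. It uses the tutorial's 16 kHz rate, so anything above 8 kHz aliases.

```swift
// Apparent frequency of a sampled pure tone: its distance to the nearest
// integer multiple of the sample rate. Tones below sampleRate / 2 are unchanged.
func apparentFrequency(of frequency: Double, sampleRate: Double) -> Double {
    let nearestMultiple = (frequency / sampleRate).rounded() * sampleRate
    return abs(frequency - nearestMultiple)
}

let fs = 16_000.0
print(apparentFrequency(of: 3_000, sampleRate: fs))   // 3000.0, below fs / 2, so it is kept
print(apparentFrequency(of: 10_000, sampleRate: fs))  // 6000.0, above fs / 2, so it is aliased
```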

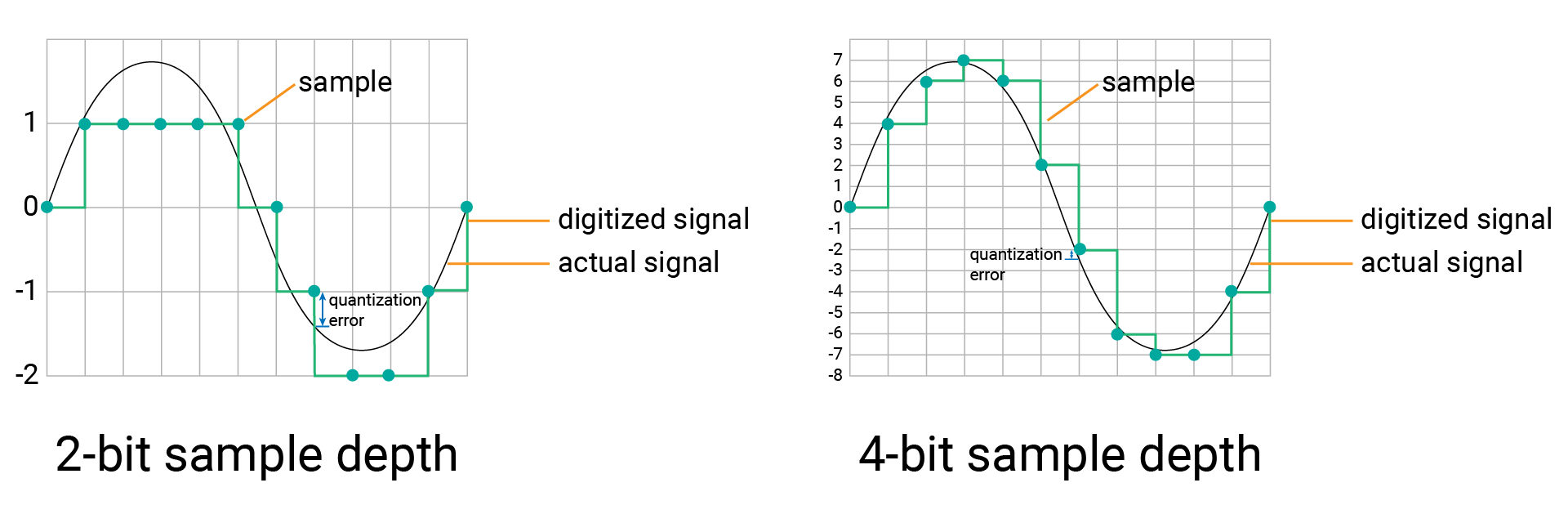

❖ Sample depth

Sample depth, also known as bit depth, refers to the number of bits of information used to represent each sample in a digital audio signal. A higher sample depth means that each sample can be represented with more precision, which can result in a more accurate representation of the original sound wave. For example, a 16-bit sample depth can represent 2^16, or 65,536, different possible values for each sample.
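The effect of bit depth can be checked directly. An n-bit sample has 2^n levels, so the same signal value falls on a much finer grid at 16 bits than at 8 bits. For simplicity, the quantizer below uses the symmetric range -(2^(n-1) - 1) to 2^(n-1) - 1. Real 16-bit PCM also allows -32768. Both function names are hypothetical.

```swift
// Number of distinct values a sample of the given bit depth can take.
func levelCount(bits: Int) -> Int {
    return 1 << bits
}

// Quantize a normalized value in -1...1 to a signed integer of the given depth.
func quantize(_ x: Double, bits: Int) -> Int {
    let maxCode = Double((1 << (bits - 1)) - 1)
    let clamped = min(max(x, -1), 1)  // keep out-of-range input on the grid
    return Int((clamped * maxCode).rounded())
}

print(levelCount(bits: 8), levelCount(bits: 16))  // 256 65536
// The same value at 8 bits and at 16 bits.
print(quantize(0.3, bits: 8), quantize(0.3, bits: 16))  // 38 9830
```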

❖ CD-quality audio

As mentioned before, the maximum frequency of human hearing is about 20 kHz, so the sample rate must be at least double that, about 40 kHz, to recreate such sounds. CD audio is sampled at 44,100 Hz, which is above this minimum and allows for accurate reproduction of most sounds that humans can hear.

CD-quality audio typically has a sample depth of 16 bits. This provides a high level of precision and allows for an accurate representation of the original analog audio signal.

It also typically uses two channels (left and right), also known as stereo sound, which allows for a more immersive listening experience.
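These three numbers determine how much data uncompressed audio produces: bit rate = sample rate x bit depth x channels. For CD-quality audio, that is about 1.4 million bits per second, or roughly 10 MB per minute. This is why uncompressed formats need a lot of storage. The function below is only an illustration of that arithmetic.

```swift
// Uncompressed PCM data rate = sample rate x bit depth x channels.
func pcmBitRate(sampleRate: Int, bitDepth: Int, channels: Int) -> Int {
    return sampleRate * bitDepth * channels
}

let cdBitRate = pcmBitRate(sampleRate: 44_100, bitDepth: 16, channels: 2)
let bytesPerMinute = cdBitRate / 8 * 60

print(cdBitRate)       // 1411200 bits per second
print(bytesPerMinute)  // 10584000 bytes, roughly 10 MB per minute of stereo audio
```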

Audio format

You may have heard of audio file formats such as MP3, WAV, AAC, and FLAC. These formats can be divided into two types, lossless and lossy, which differ in the way audio data is stored.

Lossless audio formats like WAV and FLAC preserve all the original audio data, so they offer the best possible quality for playback and editing. However, this also means that they require a lot of storage space.

Lossy audio formats like MP3 and AAC use compression to reduce the file size. The degree of compression can be adjusted to balance the file size and audio quality, with higher compression resulting in smaller file sizes but lower audio quality. They can be easily streamed or downloaded over the internet, which is why they are often used for online music services.

Let's take a closer look at two frequently used formats:

❖ WAV

WAV (or WAVE) files usually store raw PCM data. Each file also includes a header that provides information about the format of the audio data, such as the sample rate, bit depth, and number of channels, which allows other programs or devices to correctly interpret the PCM data.
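The header described above can be built byte by byte. This sketch writes the common 44-byte layout for PCM data, consisting of a "RIFF" chunk, a "fmt " chunk, and the start of a "data" chunk, with all numeric fields in little-endian order. Real-world WAV files may contain extra chunks, so a robust reader should search for chunks instead of assuming these fixed offsets. The function names are hypothetical.

```swift
// Append an integer to a byte array, least significant byte first.
func appendLE<T: FixedWidthInteger>(_ value: T, to bytes: inout [UInt8]) {
    for i in 0..<(T.bitWidth / 8) {
        bytes.append(UInt8(truncatingIfNeeded: value >> (i * 8)))
    }
}

// Canonical 44-byte header for a PCM WAV file whose sample data is `dataSize` bytes.
func wavHeader(sampleRate: Int, channels: Int, bitsPerSample: Int, dataSize: Int) -> [UInt8] {
    let byteRate = sampleRate * channels * bitsPerSample / 8
    let blockAlign = channels * bitsPerSample / 8  // bytes per frame (all channels)
    var h: [UInt8] = []
    h += Array("RIFF".utf8)
    appendLE(UInt32(36 + dataSize), to: &h)   // size of everything after this field
    h += Array("WAVE".utf8)
    h += Array("fmt ".utf8)
    appendLE(UInt32(16), to: &h)              // fmt chunk size for plain PCM
    appendLE(UInt16(1), to: &h)               // audio format 1 = PCM
    appendLE(UInt16(channels), to: &h)
    appendLE(UInt32(sampleRate), to: &h)
    appendLE(UInt32(byteRate), to: &h)
    appendLE(UInt16(blockAlign), to: &h)
    appendLE(UInt16(bitsPerSample), to: &h)
    h += Array("data".utf8)
    appendLE(UInt32(dataSize), to: &h)
    return h
}

// One second of 16 kHz, mono, 16-bit audio is 32000 bytes of PCM data.
let header = wavHeader(sampleRate: 16_000, channels: 1, bitsPerSample: 16, dataSize: 32_000)
print(header.count)  // 44
```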

While WAV files can be quite large in file size due to their uncompressed format, they are widely supported by a variety of audio software and hardware devices, and can be played back on almost any platform without the need for additional software or processing.

❖ MP3

MP3 files are compressed using a specific algorithm that selectively removes certain frequencies that are deemed to be less important or less perceptible to human ears. This loss is typically not perceptible to most listeners, especially when using headphones or other consumer-grade audio equipment.

MP3 files are widely used for distributing and playing music over the internet due to their small size and good balance between file size and audio quality. Because the data is compressed, a music player first needs to decode it and convert it back into an audio waveform that can be played through speakers or headphones.

🔸New component

Speaker

Speakers are designed to produce high-quality sound for listening to music, movies, or other types of audio content. They typically have a wide frequency response, which allows them to accurately reproduce a full range of sounds from deep bass to high treble.

❖ How does speaker generate sound?

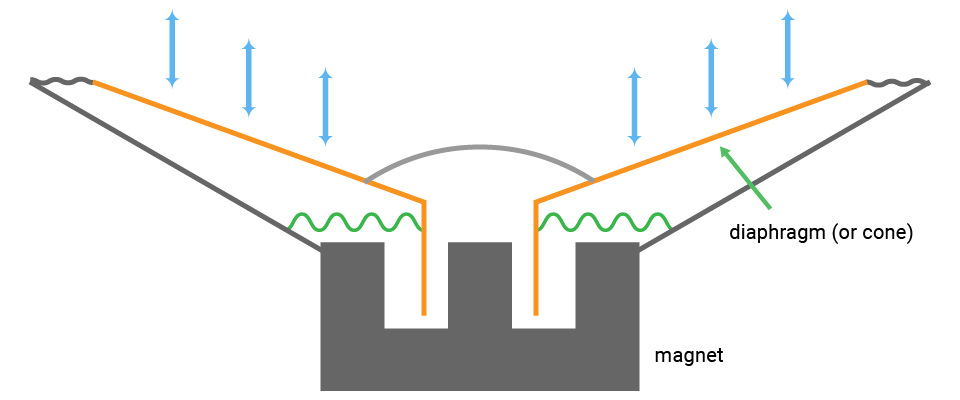

In general, the working principle of a speaker is similar to that of a buzzer. When current flows through the coil, the magnetic field it generates causes the internal diaphragm to move back and forth, pushing air molecules and creating sound waves.


The diaphragm in a buzzer is typically limited to moving back and forth between predetermined positions. By varying the frequency of the electrical signal, the buzzer can produce different tones or sounds, but it is still limited by the predetermined positions of the diaphragm.

On the other hand, in a speaker, the diaphragm is designed to move to different positions in response to the electrical signal it receives. This allows it to reproduce a wide range of frequencies, from deep bass tones to high treble notes, and produce complex sounds and music. The frequency and amplitude of the electrical signal determine the frequency and volume of the sound produced by the speaker, and these parameters directly affect the position and speed of the diaphragm.

❖ How does electrical signal affect the sound from speaker?

The frequency of the electrical signal directly determines the frequency of the sound or the pitch that is produced by the speaker, as it determines the frequency at which the diaphragm moves back and forth.

Speakers have their own frequency range, which refers to the range of audio frequencies that they can produce. The frequency range of a speaker is typically described by its lower and upper frequency limits, and how its output level varies across that range is known as its frequency response.

If the frequency of the electrical signal is too low, the diaphragm may not move enough to produce any sound, or the sound may be very weak and distorted. If the frequency is too high, the diaphragm may not be able to keep up with the rapid oscillations, and again the sound produced may be distorted.

The amplitude of the signal, on the other hand, determines the volume or loudness of the sound produced by the speaker. The greater the amplitude of the electrical signal, the greater the displacement of the diaphragm and the louder the sound that is produced.
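In digital audio, changing the amplitude simply means multiplying every sample by a ratio. This is presumably what the amplitudeRatios values in the project code do for each track. The sketch below scales Int16 samples, rounds them, and clamps the results to the Int16 range. Without the clamp, a large ratio would overflow and crash. The function name is hypothetical.

```swift
// Scale PCM samples by an amplitude ratio: below 1 is quieter, above 1 is louder.
// Results are rounded and clamped to the Int16 range, so loud peaks clip instead of overflowing.
func scaled(_ samples: [Int16], by ratio: Float) -> [Int16] {
    return samples.map { sample in
        let value = (Float(sample) * ratio).rounded()
        let clamped = min(max(value, Float(Int16.min)), Float(Int16.max))
        return Int16(clamped)
    }
}

print(scaled([1000, -1000, 32767], by: 0.5))  // [500, -500, 16384]
print(scaled([20000, -20000], by: 2))          // [32767, -32768], clipped at the limits
```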

As mentioned before, the waveform of the signal applied to a speaker has a direct impact on the tonal quality or timbre of the sound that is produced.

Finally, the prices of speakers vary widely depending on factors such as quality, features, size, materials, and intended use. Cheaper speakers are generally not as good at reproducing low frequencies as more expensive ones, and the speaker on the SwiftIO Playground is no exception.

MAX98357

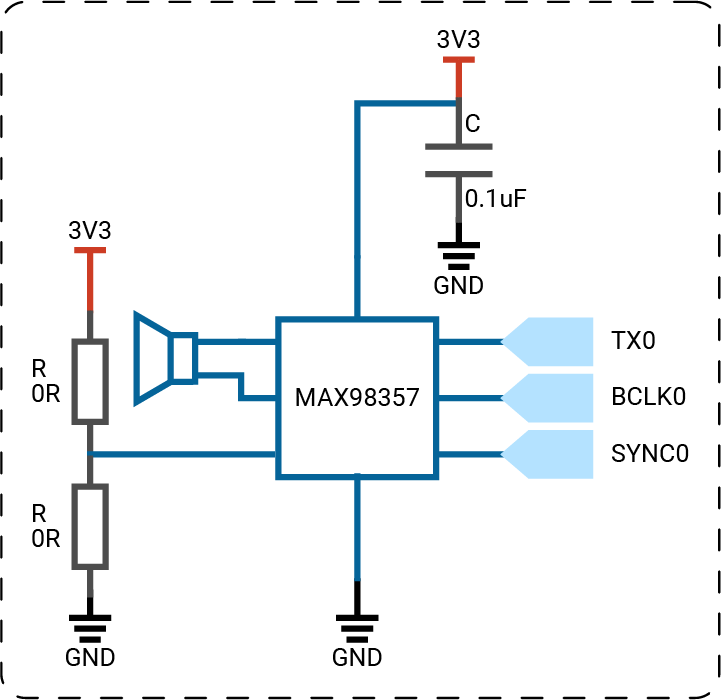

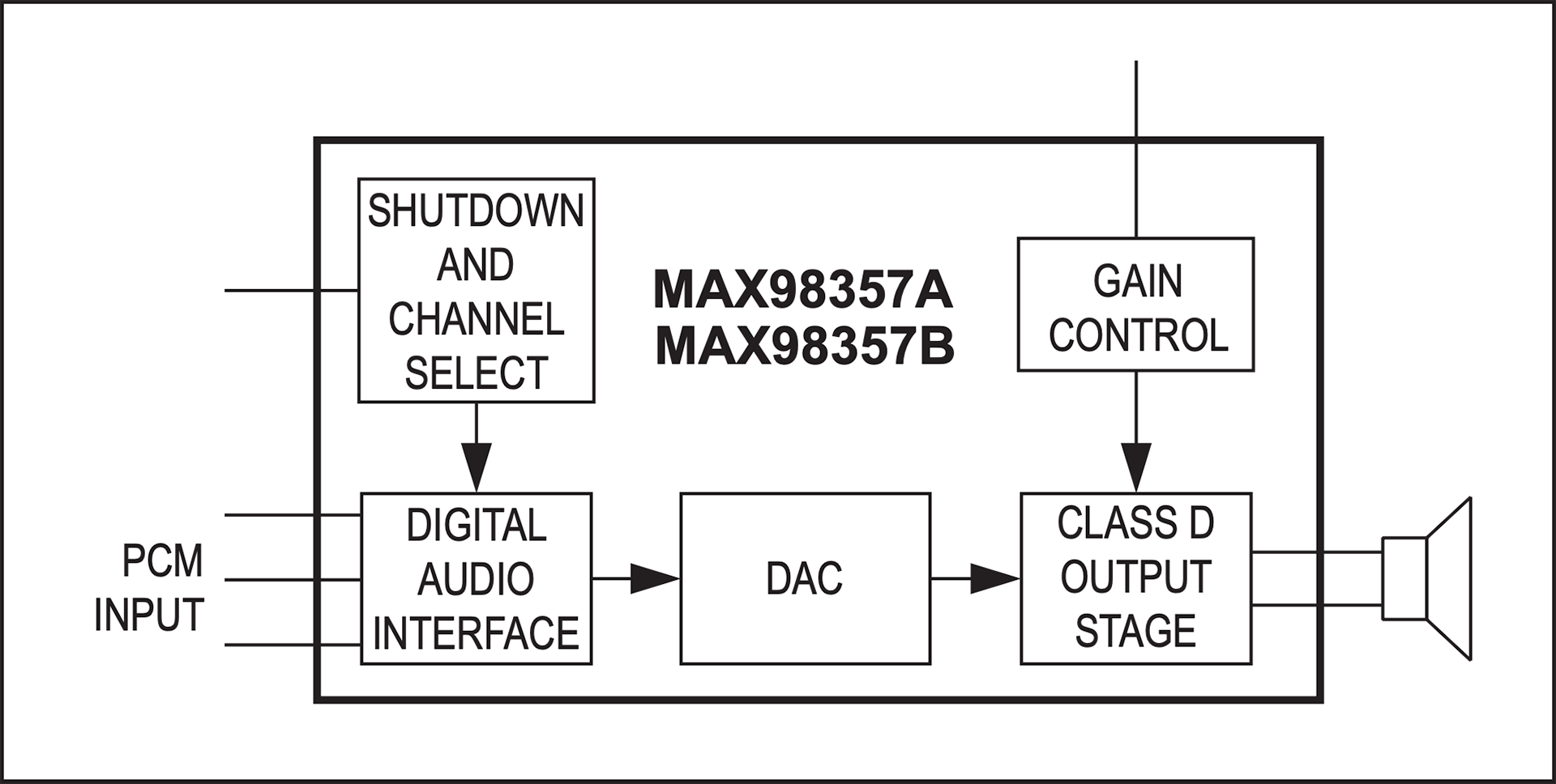

The signal from an I2S interface cannot be directly used to drive a speaker since it is a digital signal that needs to be converted before being played through a speaker.

MAX98357 is a fully integrated Class D amplifier with a built-in digital-to-analog converter (DAC). The DAC converts the digital audio signal to an analog voltage signal, which the amplifier then boosts so that it can drive a speaker.

❖ How does MAX98357 work with speaker?

- PCM audio data is sent using I2S to MAX98357.

- MAX98357 receives the digital audio data.

- The digital audio data is converted to an analog audio signal by the internal DAC of the MAX98357.

- The analog audio signal is then passed through the built-in amplifier of the MAX98357 to amplify it to a level that is sufficient to drive a speaker.

- The amplified audio signal is passed through an output low-pass filter to remove any unwanted high-frequency noise and produce the final amplified analog audio signal.

- The final amplified analog audio signal is sent to the speaker to produce sound.

❖ Channel select

MAX98357 is designed to accept stereo audio input and can output audio with the left channel, right channel, or both channels combined.

Stereo audio is designed to be played back through two separate speakers, one for the left channel and one for the right channel. However, in some applications where only one speaker is available, the left channel is often used as the default channel for mono audio.

Therefore, the MAX98357 on this module is wired to output only the left channel. It takes the audio data for the left channel and discards the audio data for the right channel.
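If the I2S driver expects interleaved stereo frames (left sample, right sample, left sample, ...), mono audio has to be placed in the left slot. Whether SwiftIO's write expects interleaved stereo data is an assumption here, so check the SwiftIO documentation. Because the amplifier discards the right channel, that slot can hold silence or a copy of the left sample. The helper name is hypothetical.

```swift
// Build interleaved stereo frames (left, right, left, right, ...) from mono samples.
// The left slot carries the audio; the right slot is ignored by the amplifier on this module.
func stereoFrames(from mono: [Int16], duplicateToRight: Bool = false) -> [Int16] {
    var frames: [Int16] = []
    frames.reserveCapacity(mono.count * 2)
    for sample in mono {
        frames.append(sample)                         // left channel: played
        frames.append(duplicateToRight ? sample : 0)  // right channel: discarded
    }
    return frames
}

print(stereoFrames(from: [100, -200, 300]))  // [100, 0, -200, 0, 300, 0]
```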

❖ Gain setting

The MAX98357 also provides several gain settings that let you adjust the amplification level of the audio signal. The default gain setting is 9 dB.

If you want to change the gain, use the three solder pads on the back of the speaker module. Soldering a 0-ohm resistor or jumper wire between the 6dB and 9dB pads sets the gain to 6 dB, and bridging the 9dB and 12dB pads sets it to 12 dB.
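To get a feel for what these settings mean, a gain in decibels converts to an amplitude ratio with ratio = 10^(dB / 20). This sketch treats the pad values as amplitude (voltage) gain, which is the usual convention for amplifiers. The MAX98357 datasheet defines the exact reference level. Moving between adjacent settings changes the gain by 3 dB, about 1.41 times the amplitude.

```swift
import Foundation

// Convert a gain in decibels to an amplitude (voltage) ratio: 10^(dB / 20).
func amplitudeRatio(decibels: Double) -> Double {
    return pow(10, decibels / 20)
}

for gain in [6.0, 9.0, 12.0] {
    print(gain, "dB ->", amplitudeRatio(decibels: gain))
}
// Going from the default 9 dB to 12 dB is a 3 dB step.
print(amplitudeRatio(decibels: 12 - 9))
```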